NveEffectKit Integration Guide

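NveEffectKit is built on top of the Meishe SDK and bundles beauty, face shaping, makeup, compose makeup (full looks), filter, and face prop features, so that you can apply them to a texture or a buffer. For example, you can add these effects to a still image, or to every frame (texture or buffer) of a live stream or recording. NveEffectKit depends on NvEffectSdkCore (or NvStreamingSdkCore). This guide walks through the integration steps using NveEffectKitDemo, a demo that integrates NveEffectKit. The demo applies the effects above during capture; NveEffectKit also supports applying them during editing.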

Step 1: Add the dependency libraries

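NveEffectKit.framework calls Meishe SDK interfaces internally, so each build of it is paired with the Meishe SDK library it references. If you purchased and use NvEffectSdkCore, you need the NveEffectKit.framework that references NvEffectSdkCore; likewise, if you purchased and use NvStreamingSdkCore, you need the NveEffectKit.framework that references NvStreamingSdkCore. Contact our technical support or sales team to obtain the matching libraries.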

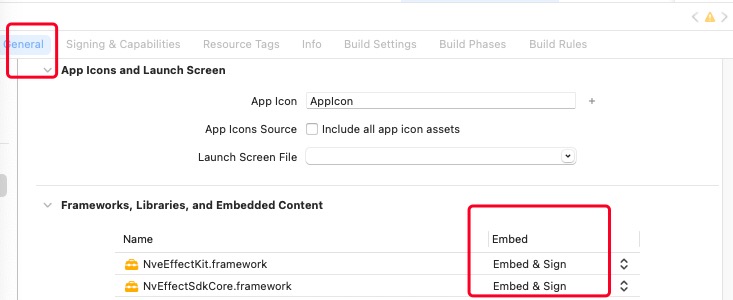

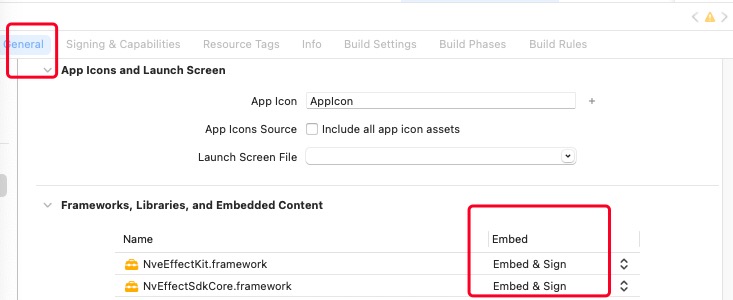

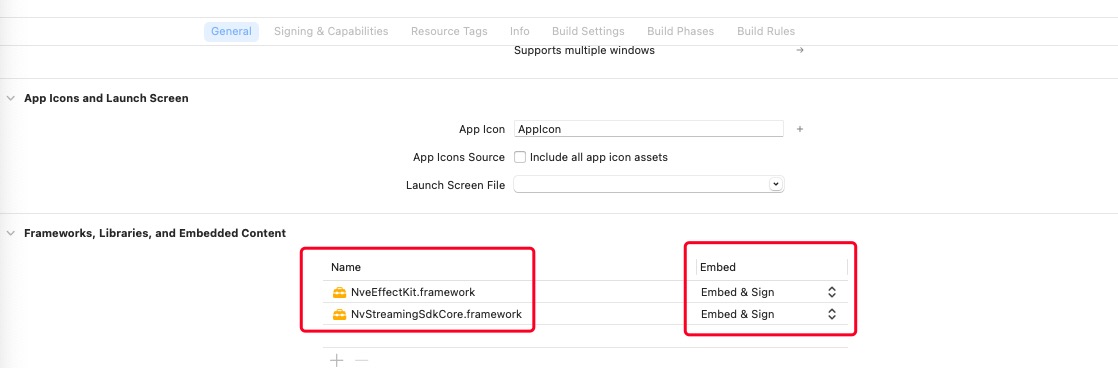

If you use NvEffectSdkCore, add the libraries as shown in Figure 1-1.

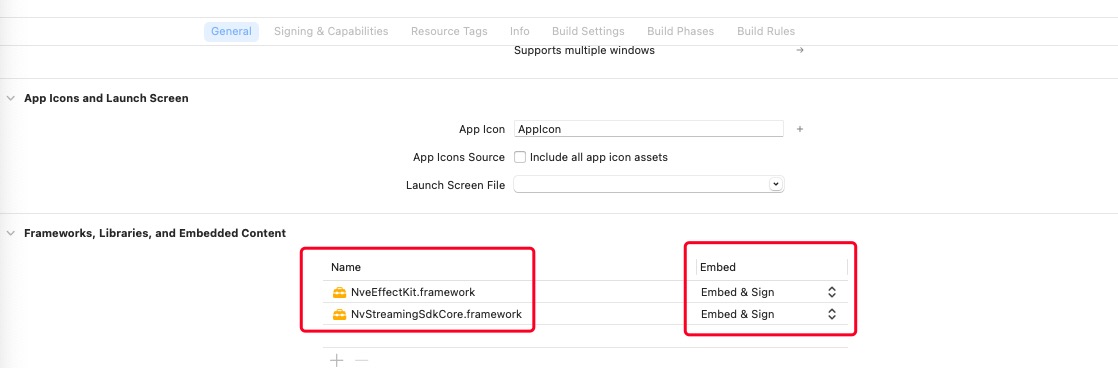

Figure 1-1

If you use NvStreamingSdkCore, add the libraries as shown in Figure 1-2.

Figure 1-2

Step 2: Authorize the SDK

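Before using any Meishe functionality you must call the authorization interface to verify the SDK license. Verification is needed only once; we recommend doing it in your AppDelegate. Note that the authorization interface must be called before the NveEffectKit singleton is created. The code is as follows: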

NSString *sdkLicFilePath = [[NSBundle mainBundle] pathForResource:@"meishesdk.lic" ofType:nil];

if (![NveEffectKit verifySdkLicenseFile:sdkLicFilePath]) {

NSLog(@"Sdk License File error");

}

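Important: the Meishe SDK license file is bound to the project's Bundle Identifier. If you change the Bundle Identifier, you must replace the license file with the matching one.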

Step 3: Prepare the frame data to process

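This step is independent of the SDK. Depending on your project, the source can be camera capture, recording, a single image, and so on; all that matters is that you can obtain the corresponding texture or buffer data. For capture, you can use the system's AVFoundation or any third-party library that provides capture, as long as its frame delegate callback gives you each frame in real time. The demo implements capture with AVFoundation and receives each frame through AVCaptureVideoDataOutputSampleBufferDelegate, as follows: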

- (void)captureOutput:(AVCaptureOutput *)output didOutputSampleBuffer:(CMSampleBufferRef)sampleBuffer fromConnection:(AVCaptureConnection *)connection {

if (self.delegate && [self.delegate respondsToSelector:@selector(captureOutput:didOutputSampleBuffer:fromConnection:isAudioConnection:)]) {

if (connection == _audioConnection) {

[self.delegate captureOutput:output didOutputSampleBuffer:sampleBuffer fromConnection:connection isAudioConnection:YES];

} else {

[self.delegate captureOutput:output didOutputSampleBuffer:sampleBuffer fromConnection:connection isAudioConnection:NO];

}

}

}

Step 4: Initialize the face models before using face effects

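Beauty, face shaping, makeup, compose makeup, and face props are all face-related effects, so the face models must be initialized before these effects are used, and only once. Initialization does not allocate the memory for all models up front; memory grows only when the corresponding feature is actually used. This is the SDK's memory optimization strategy for face models. The initialization code is as follows: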

NSString *bundlePath = [[[NSBundle bundleForClass:[self class]] bundlePath] stringByAppendingPathComponent:@"model.bundle"];

NSString *faceModel = [bundlePath stringByAppendingPathComponent:@"ms_face240_v3.0.1.model"];

BOOL ret = [NveEffectKit initHumanDetection:NveDetectionModelType_face modelPath:faceModel licenseFilePath:nil];

if (!ret) {

NSLog(@"init human detect error: face");

}

NSString *fakeFace = [bundlePath stringByAppendingPathComponent:@"fakeface_v1.0.1.dat"];

ret = [NveEffectKit initHumanDetection:NveDetectionModelType_fakeFace modelPath:fakeFace licenseFilePath:nil];

if (!ret) {

NSLog(@"init human detect error: fakeFace");

}

NSString *avatar = [bundlePath stringByAppendingPathComponent:@"ms_avatar_v2.0.0.model"];

ret = [NveEffectKit initHumanDetection:NveDetectionModelType_avatar modelPath:avatar licenseFilePath:nil];

if (!ret) {

NSLog(@"init human detect error: avatar");

}

NSString *eyeball = [bundlePath stringByAppendingPathComponent:@"ms_eyecontour_v2.0.0.model"];

ret = [NveEffectKit initHumanDetection:NveDetectionModelType_eyeball modelPath:eyeball licenseFilePath:nil];

if (!ret) {

NSLog(@"init human detect error: eyeball");

}

NSString *background = [bundlePath stringByAppendingPathComponent:@"ms_humanseg_medium_v2.0.0.model"];

BOOL highVersion = [NvLiveEffectModule isHighVersionPhone];

if (highVersion == NO) {

background = [[[bundlePath stringByAppendingPathComponent:@"license"]stringByAppendingPathComponent:@"ms"] stringByAppendingPathComponent:@"ms_humanseg_small_v2.0.0.model"];

}

ret = [NveEffectKit initHumanDetection:NveDetectionModelType_background modelPath:background licenseFilePath:nil];

if (!ret) {

NSLog(@"init human detect error: background");

}

NSString *hand = [bundlePath stringByAppendingPathComponent:@"ms_hand_v2.0.0.model"];

ret = [NveEffectKit initHumanDetection:NveDetectionModelType_hand modelPath:hand licenseFilePath:nil];

if (!ret) {

NSLog(@"init human detect error: hand");

}

NSString *faceCommon = [bundlePath stringByAppendingPathComponent:@"facecommon_v1.0.1.dat"];

ret = [NveEffectKit initHumanDetection:NveDetectionModelType_faceCommon modelPath:faceCommon licenseFilePath:nil];

if (!ret) {

NSLog(@"init human detect error: faceCommon");

}

NSString *advancedbeauty = [bundlePath stringByAppendingPathComponent:@"advancedbeauty_v1.0.1.dat"];

ret = [NveEffectKit initHumanDetection:NveDetectionModelType_advancedBeauty modelPath:advancedbeauty licenseFilePath:nil];

if (!ret) {

NSLog(@"init human detect error: advancedbeauty");

}

The detection model types used in the code above and their purposes are listed below.

| Detection model type | Name | Purpose |
| --- | --- | --- |
| NveDetectionModelType_face | Face | Face model |
| NveDetectionModelType_hand | Hand gesture | Used by props that respond to hand gestures |
| NveDetectionModelType_avatar | Avatar | Used by avatar props |
| NveDetectionModelType_fakeFace | Fake face | Used by face props |
| NveDetectionModelType_eyeball | Eyeball | Eyeball model |
| NveDetectionModelType_makeup2 | Makeup | Deprecated since SDK 3.13.0 (inclusive); use NveDetectionModelType_faceCommon instead |
| NveDetectionModelType_background | Background segmentation | Some face props use the segmentation effect |
| NveDetectionModelType_faceCommon | Common face model | SDK 3.13.0 and later: must be initialized whenever face-related features or makeup are used |
| NveDetectionModelType_advancedBeauty | Advanced beauty model | SDK 3.13.0 and later: must be initialized to enable advanced beauty |

Note: if any of the model files above are missing during development, contact technical support or sales to obtain them.
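The table can also be read in the other direction: given the features an app offers, which models must be initialized? The sketch below encodes that reading in plain C, which compiles unchanged inside an Objective-C file. The flag names and the feature list are hypothetical and are not SDK types; the eyeball model is left out because the table does not say which feature needs it, and makeup2 is left out because it is deprecated.

```c
/* Hypothetical bit flags mirroring the NveDetectionModelType_* rows of the table. */
enum {
    MODEL_FACE            = 1 << 0,
    MODEL_HAND            = 1 << 1,
    MODEL_AVATAR          = 1 << 2,
    MODEL_FAKE_FACE       = 1 << 3,
    MODEL_BACKGROUND      = 1 << 4,
    MODEL_FACE_COMMON     = 1 << 5,
    MODEL_ADVANCED_BEAUTY = 1 << 6
};

/* Hypothetical feature flags for an app; these are not SDK types. */
enum {
    FEATURE_BEAUTY_OR_SHAPE = 1 << 0,
    FEATURE_MAKEUP          = 1 << 1,
    FEATURE_ADVANCED_BEAUTY = 1 << 2,
    FEATURE_FACE_PROP       = 1 << 3,
    FEATURE_GESTURE_PROP    = 1 << 4,
    FEATURE_AVATAR_PROP     = 1 << 5,
    FEATURE_SEGMENT_PROP    = 1 << 6
};

/* Returns the set of models to initialize for the requested features. */
unsigned required_models(unsigned features) {
    unsigned models = 0;
    if (features != 0) {
        /* Every feature listed above is face related (SDK 3.13.0 and later). */
        models |= MODEL_FACE | MODEL_FACE_COMMON;
    }
    if (features & FEATURE_ADVANCED_BEAUTY) models |= MODEL_ADVANCED_BEAUTY;
    if (features & FEATURE_FACE_PROP)       models |= MODEL_FAKE_FACE;
    if (features & FEATURE_GESTURE_PROP)    models |= MODEL_HAND;
    if (features & FEATURE_AVATAR_PROP)     models |= MODEL_AVATAR;
    if (features & FEATURE_SEGMENT_PROP)    models |= MODEL_BACKGROUND;
    return models;
}
```

An app that only offers makeup would get MODEL_FACE and MODEL_FACE_COMMON back and could skip the remaining model files.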

Step 5: Add effect data to the NveEffectKit singleton

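Next, add the different kinds of effect data to the NveEffectKit singleton so that the following step can render all of them onto the output texture or buffer. Add the effect data wherever it fits in your project. For example, when the user taps to add a filter, set that filter on the NveEffectKit singleton in the tap handler; the singleton manages all effects that have been added. Each kind of effect is described below: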

Beauty

NveBeauty *beauty = [[NveBeauty alloc] init];

// Skin smoothing

beauty.strength = 1;

[NveEffectKit shareInstance].beauty = beauty;

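Beauty also lets you switch the whitening type and the skin smoothing type, adjust intensities, and so on; see the code below. (This is a category method on NveBeauty. It is not part of NveEffectKit.framework and can be found in the demo. It exists to map UI operations to effect settings, and you can write your own methods instead. The same applies to the other effect types below and is not repeated.) As with strength, all resulting values are stored in [NveEffectKit shareInstance].beauty.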

- (void)updateEffect:(NveBeautyLiveType)type value:(double)value {

switch (type) {

case NveBeautyLiveType_whitening:

self.whitening = value;

[self setEffectParam:@"Whitening Lut Enabled" type:NveParamType_bool value:@(NO)];

[self setEffectParam:@"Whitening Lut File" type:NveParamType_string value:@""];

break;

case NveBeautyLiveType_whitening_1:{

self.whitening = value;

NSString *whitenLutPath = [[[NSBundle bundleForClass:[NvLiveEffectModule class]] bundlePath] stringByAppendingPathComponent:@"whitenLut.bundle"];

NSString *lutPath = [whitenLutPath stringByAppendingPathComponent:@"WhiteB.mslut"];

[self setEffectParam:@"Whitening Lut Enabled" type:NveParamType_bool value:@(YES)];

[self setEffectParam:@"Whitening Lut File" type:NveParamType_string value:lutPath];

}

break;

case NveBeautyLiveType_reddening:

self.reddening = value;

break;

case NveBeautyLiveType_strength:

self.strength = value;

[self setEffectParam:@"Advanced Beauty Intensity" type:NveParamType_float value:@(0)];

[self setEffectParam:@"Beauty Strength" type:NveParamType_float value:@(value)];

break;

case NveBeautyLiveType_strength_1:

self.strength = value;

[self setEffectParam:@"Advanced Beauty Enable" type:NveParamType_bool value:@(YES)];

[self setEffectParam:@"Advanced Beauty Type" type:NveParamType_int value:@(0)];

[self setEffectParam:@"Beauty Strength" type:NveParamType_float value:@(0)];

[self setEffectParam:@"Advanced Beauty Intensity" type:NveParamType_float value:@(value)];

break;

case NveBeautyLiveType_strength_2:

self.strength = value;

[self setEffectParam:@"Advanced Beauty Enable" type:NveParamType_bool value:@(YES)];

[self setEffectParam:@"Advanced Beauty Type" type:NveParamType_int value:@(1)];

[self setEffectParam:@"Beauty Strength" type:NveParamType_float value:@(0)];

[self setEffectParam:@"Advanced Beauty Intensity" type:NveParamType_float value:@(value)];

break;

case NveBeautyLiveType_strength_3:

self.strength = value;

[self setEffectParam:@"Advanced Beauty Enable" type:NveParamType_bool value:@(YES)];

[self setEffectParam:@"Advanced Beauty Type" type:NveParamType_int value:@(2)];

[self setEffectParam:@"Beauty Strength" type:NveParamType_float value:@(0)];

[self setEffectParam:@"Advanced Beauty Intensity" type:NveParamType_float value:@(value)];

break;

case NveBeautyLiveType_matte:

self.matte = value;

break;

case NveBeautyLiveType_sharpen:

self.sharpen = value;

break;

case NveBeautyLiveType_definition:

self.definition = value;

break;

}

}
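The four strength cases in the switch above differ only in which parameters they write. As a reading aid, the sketch below restates them as data in plain C. The type and function names are hypothetical; the parameter values are taken from the demo code above, and nothing here calls the SDK.

```c
#include <stdbool.h>

/* Hypothetical mirror of the four strength cases of NveBeautyLiveType. */
typedef enum {
    STRENGTH   = 0,  /* basic skin smoothing */
    STRENGTH_1 = 1,  /* advanced beauty, type 0 */
    STRENGTH_2 = 2,  /* advanced beauty, type 1 */
    STRENGTH_3 = 3   /* advanced beauty, type 2 */
} StrengthVariant;

typedef struct {
    bool   sets_advanced_enable; /* "Advanced Beauty Enable" is written as YES */
    int    advanced_type;        /* "Advanced Beauty Type"; -1 when not written */
    double beauty_strength;      /* "Beauty Strength" */
    double advanced_intensity;   /* "Advanced Beauty Intensity" */
} StrengthParams;

/* Returns the parameter values the demo writes for a variant and slider value. */
StrengthParams strength_params(StrengthVariant variant, double value) {
    StrengthParams p;
    if (variant == STRENGTH) {
        p.sets_advanced_enable = false;
        p.advanced_type = -1;
        p.beauty_strength = value;
        p.advanced_intensity = 0.0;
    } else {
        p.sets_advanced_enable = true;
        p.advanced_type = (int)variant - 1;  /* 0, 1 or 2 */
        p.beauty_strength = 0.0;
        p.advanced_intensity = value;
    }
    return p;
}
```

Note that in the demo the basic case zeroes the advanced intensity but does not write "Advanced Beauty Enable", which is why the struct records whether that flag is written rather than its value.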

Face shaping

NveShape *shape = [[NveShape alloc] init];

// Face slimming

shape.faceWidth = 1;

[NveEffectKit shareInstance].shape = shape;

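Face shaping offers many more effects than face slimming; they are not all listed here, see the comments in the code. One point to note: every face shaping effect is a package asset, and each effect type can have several package files that implement different algorithms (for example, several eye enlarging packages with different algorithms). You therefore need to install the asset and, once installation succeeds, set the asset's uuid on the target effect. Asset installation is covered in a later section. The code below sets the uuid and then the value of each effect. You can add it to a category on NveShape (these category methods are not part of NveEffectKit.framework and can be found in the demo), call it where appropriate, and store the data in [NveEffectKit shareInstance].shape.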

- (void)updateEffect:(NveShapeLiveType)type packageId:(NSString *)packageId {

if (!packageId) {

return;

}

switch (type) {

case NveShapeLiveType_faceWidth:

self.faceWidthPackageId = packageId;

break;

case NveShapeLiveType_faceLength:

self.faceLengthPackageId = packageId;

break;

case NveShapeLiveType_faceSize:

self.faceSizePackageId = packageId;

break;

case NveShapeLiveType_foreheadHeight:

self.foreheadHeightPackageId = packageId;

break;

case NveShapeLiveType_chinLength:

self.chinLengthPackageId = packageId;

break;

case NveShapeLiveType_eyeSize:

self.eyeSizePackageId = packageId;

break;

case NveShapeLiveType_eyeCornerStretch:

self.eyeCornerStretchPackageId = packageId;

break;

case NveShapeLiveType_noseWidth:

self.noseWidthPackageId = packageId;

break;

case NveShapeLiveType_noseLength:

self.noseLengthPackageId = packageId;

break;

case NveShapeLiveType_mouthSize:

self.mouthSizePackageId = packageId;

break;

case NveShapeLiveType_mouthCornerLift:

self.mouthCornerLiftPackageId = packageId;

break;

}

}

The values are set as follows:

- (void)updateEffect:(NveShapeLiveType)type value:(double)value {

switch (type) {

case NveShapeLiveType_faceWidth:

self.faceWidth = value;

break;

case NveShapeLiveType_faceLength:

self.faceLength = value;

break;

case NveShapeLiveType_faceSize:

self.faceSize = value;

break;

case NveShapeLiveType_foreheadHeight:

self.foreheadHeight = value;

break;

case NveShapeLiveType_chinLength:

self.chinLength = value;

break;

case NveShapeLiveType_eyeSize:

self.eyeSize = value;

break;

case NveShapeLiveType_eyeCornerStretch:

self.eyeCornerStretch = value;

break;

case NveShapeLiveType_noseWidth:

self.noseWidth = value;

break;

case NveShapeLiveType_noseLength:

self.noseLength = value;

break;

case NveShapeLiveType_mouthSize:

self.mouthSize = value;

break;

case NveShapeLiveType_mouthCornerLift:

self.mouthCornerLift = value;

break;

}

}

Micro shaping

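Micro shaping is a special case: some of its effects use assets that must be installed, while others are built in. The implementation still follows the same pattern as the other types.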

NveMicroShape *microShape = [[NveMicroShape alloc] init];

// Head size reduction

microShape.headSize = 1;

[NveEffectKit shareInstance].microShape = microShape;

Set the uuid of each required package asset on [NveEffectKit shareInstance].microShape (this is a category method on NveMicroShape; it is not part of NveEffectKit.framework and can be found in the demo):

- (void)updateEffect:(NveMicroShapeLiveType)type packageId:(NSString *)packageId {

switch (type) {

case NveMicroShapeLiveType_headSize:

self.headSizePackageId = packageId;

break;

case NveMicroShapeLiveType_malarWidth:

self.malarWidthPackageId = packageId;

break;

case NveMicroShapeLiveType_jawWidth:

self.jawWidthPackageId = packageId;

break;

case NveMicroShapeLiveType_templeWidth:

self.templeWidthPackageId = packageId;

break;

case NveMicroShapeLiveType_removeNasolabialFolds:

case NveMicroShapeLiveType_removeDarkCircles:

case NveMicroShapeLiveType_brightenEyes:

case NveMicroShapeLiveType_whitenTeeth:

// Custom effect packages are not supported for these types

break;

case NveMicroShapeLiveType_eyeDistance:

self.eyeDistancePackageId = packageId;

break;

case NveMicroShapeLiveType_eyeAngle:

self.eyeAnglePackageId = packageId;

break;

case NveMicroShapeLiveType_philtrumLength:

self.philtrumLengthPackageId = packageId;

break;

case NveMicroShapeLiveType_noseBridgeWidth:

self.noseBridgeWidthPackageId = packageId;

break;

case NveMicroShapeLiveType_noseHeadWidth:

self.noseHeadWidthPackageId = packageId;

break;

case NveMicroShapeLiveType_eyebrowThickness:

self.eyebrowThicknessPackageId = packageId;

break;

case NveMicroShapeLiveType_eyebrowAngle:

self.eyebrowAnglePackageId = packageId;

break;

case NveMicroShapeLiveType_eyebrowXOffset:

self.eyebrowXOffsetPackageId = packageId;

break;

case NveMicroShapeLiveType_eyebrowYOffset:

self.eyebrowYOffsetPackageId = packageId;

break;

case NveMicroShapeLiveType_eyeWidth:

self.eyeWidthPackageId = packageId;

break;

case NveMicroShapeLiveType_eyeHeight:

self.eyeHeightPackageId = packageId;

break;

case NveMicroShapeLiveType_eyeArc:

self.eyeArcPackageId = packageId;

break;

case NveMicroShapeLiveType_eyeYOffset:

self.eyeYOffsetPackageId = packageId;

break;

}

}

The values are set as follows:

- (void)updateEffect:(NveMicroShapeLiveType)type value:(double)value {

switch (type) {

case NveMicroShapeLiveType_headSize:

self.headSize = value;

break;

case NveMicroShapeLiveType_malarWidth:

self.malarWidth = value;

break;

case NveMicroShapeLiveType_jawWidth:

self.jawWidth = value;

break;

case NveMicroShapeLiveType_templeWidth:

self.templeWidth = value;

break;

case NveMicroShapeLiveType_removeNasolabialFolds:

self.removeNasolabialFolds = value;

break;

case NveMicroShapeLiveType_removeDarkCircles:

self.removeDarkCircles = value;

break;

case NveMicroShapeLiveType_brightenEyes:

self.brightenEyes = value;

break;

case NveMicroShapeLiveType_whitenTeeth:

self.whitenTeeth = value;

break;

case NveMicroShapeLiveType_eyeDistance:

self.eyeDistance = value;

break;

case NveMicroShapeLiveType_eyeAngle:

self.eyeAngle = value;

break;

case NveMicroShapeLiveType_philtrumLength:

self.philtrumLength = value;

break;

case NveMicroShapeLiveType_noseBridgeWidth:

self.noseBridgeWidth = value;

break;

case NveMicroShapeLiveType_noseHeadWidth:

self.noseHeadWidth = value;

break;

case NveMicroShapeLiveType_eyebrowThickness:

self.eyebrowThickness = value;

break;

case NveMicroShapeLiveType_eyebrowAngle:

self.eyebrowAngle = value;

break;

case NveMicroShapeLiveType_eyebrowXOffset:

self.eyebrowXOffset = value;

break;

case NveMicroShapeLiveType_eyebrowYOffset:

self.eyebrowYOffset = value;

break;

case NveMicroShapeLiveType_eyeWidth:

self.eyeWidth = value;

break;

case NveMicroShapeLiveType_eyeHeight:

self.eyeHeight = value;

break;

case NveMicroShapeLiveType_eyeArc:

self.eyeArc = value;

break;

case NveMicroShapeLiveType_eyeYOffset:

self.eyeYOffset = value;

break;

}

}

All micro shaping data is now stored in [NveEffectKit shareInstance].microShape.

Makeup

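Makeup here refers to files with the .makeup extension, each of which is a single makeup item. These also require installing the asset file and specifying the packageId.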

NveMakeup *makeup = [NveEffectKit shareInstance].makeup;

if (!makeup) {

makeup = [[NveMakeup alloc] init];

[NveEffectKit shareInstance].makeup = makeup;

}

[makeup updateEffect:effectModel.effectType packageId:effectModel.uuid];

[makeup updateEffect:effectModel.effectType value:effectModel.value];

The two methods called above are category methods on NveMakeup. They are not part of NveEffectKit.framework and can be found in the demo:

- (void)updateEffect:(NveMakeupLiveType)type packageId:(NSString *)packageId {

switch (type) {

case NveMakeupLiveType_lip:

self.lipPackageId = packageId;

break;

case NveMakeupLiveType_eyeshadow:

self.eyeshadowPackageId = packageId;

break;

case NveMakeupLiveType_eyebrow:

self.eyebrowPackageId = packageId;

break;

case NveMakeupLiveType_eyelash:

self.eyelashPackageId = packageId;

break;

case NveMakeupLiveType_eyeliner:

self.eyelinerPackageId = packageId;

break;

case NveMakeupLiveType_blusher:

self.blusherPackageId = packageId;

break;

case NveMakeupLiveType_brighten:

self.brightenPackageId = packageId;

break;

case NveMakeupLiveType_shadow:

self.shadowPackageId = packageId;

break;

case NveMakeupLiveType_eyeball:

self.eyeballPackageId = packageId;

break;

}

}

- (void)updateEffect:(NveMakeupLiveType)type value:(double)value {

switch (type) {

case NveMakeupLiveType_lip:

self.lip = value;

break;

case NveMakeupLiveType_eyeshadow:

self.eyeshadow = value;

break;

case NveMakeupLiveType_eyebrow:

self.eyebrow = value;

break;

case NveMakeupLiveType_eyelash:

self.eyelash = value;

break;

case NveMakeupLiveType_eyeliner:

self.eyeliner = value;

break;

case NveMakeupLiveType_blusher:

self.blusher = value;

break;

case NveMakeupLiveType_brighten:

self.brighten = value;

break;

case NveMakeupLiveType_shadow:

self.shadow = value;

break;

case NveMakeupLiveType_eyeball:

self.eyeball = value;

break;

}

}

You can also set the color:

- (void)updateEffect:(NveMakeupLiveType)type color:(UIColor *)color {

switch (type) {

case NveMakeupLiveType_lip:

self.lipColor = color;

break;

case NveMakeupLiveType_eyeshadow:

self.eyeshadowColor = color;

break;

case NveMakeupLiveType_eyebrow:

self.eyebrowColor = color;

break;

case NveMakeupLiveType_eyelash:

self.eyelashColor = color;

break;

case NveMakeupLiveType_eyeliner:

self.eyelinerColor = color;

break;

case NveMakeupLiveType_blusher:

self.blusherColor = color;

break;

case NveMakeupLiveType_brighten:

self.brightenColor = color;

break;

case NveMakeupLiveType_shadow:

self.shadowColor = color;

break;

case NveMakeupLiveType_eyeball:

self.eyeballColor = color;

break;

}

}

As before, the makeup data must be stored in [NveEffectKit shareInstance].makeup.

Compose makeup

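A compose makeup is a folder that bundles several kinds of effects, such as makeup, face shaping, and beauty. A JSON file in the folder specifies the value of each effect (for example intensity, eye size, or lipstick strength), and the whole set is applied as one look. Applying or removing a compose makeup updates the beauty, face shaping, micro shaping, and makeup data. You only need to pass the path of the unzipped compose makeup folder.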

// packagePath: path of the compose makeup package

NveComposeMakeup *composeMakeup = [NveComposeMakeup composeMakeupWithPackagePath:packagePath];

[NveEffectKit shareInstance].composeMakeup = composeMakeup;

Filters

NvFilter *filter = [NvFilter filterWithEffectId:packageId];

// Adjust the intensity

filter.intensity = 0.5;

NvFilterContainer *filterContainer = [NveEffectKit shareInstance].filterContainer;

// Add

[filterContainer append:filter];

// Remove

[filterContainer remove:filter];

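Built-in filters are listed at https://www.meishesdk.com/ios/doc_ch/html/content/FxNameList_8md.html. You can also use a filter package such as 0FBCC8A1-C16E-4FEB-BBDE-D04B91D98A40.1.videofx. A filter package must be installed to obtain its packageId, which is the uuid of the package.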
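The example file name above appears to follow the pattern uuid, number, type, separated by dots. If you need to inspect a package file before installing it, a small parser along the following lines may help. This is a sketch in plain C: the function name is hypothetical, and reading the middle field as a package version is an assumption, since this guide does not define it. The authoritative packageId is still the one returned by installation.

```c
#include <stddef.h>
#include <string.h>

/* Splits "<uuid>.<number>.<type>" into its three parts.
   Returns 0 on success, -1 if the name does not match or a buffer is too small. */
int parse_package_file_name(const char *name,
                            char *uuid, size_t uuid_cap,
                            int *number,
                            char *type, size_t type_cap) {
    const char *first_dot = strchr(name, '.');
    const char *last_dot = strrchr(name, '.');
    if (first_dot == NULL || first_dot == last_dot) {
        return -1;  /* fewer than two dots */
    }
    size_t uuid_len = (size_t)(first_dot - name);
    size_t digits = (size_t)(last_dot - first_dot - 1);
    size_t type_len = strlen(last_dot + 1);
    if (uuid_len == 0 || uuid_len >= uuid_cap) return -1;
    if (digits == 0 || digits > 9) return -1;  /* keeps the value within int range */
    if (type_len == 0 || type_len >= type_cap) return -1;

    int value = 0;
    for (const char *p = first_dot + 1; p < last_dot; ++p) {
        if (*p < '0' || *p > '9') return -1;   /* middle field must be digits only */
        value = value * 10 + (*p - '0');
    }
    memcpy(uuid, name, uuid_len);
    uuid[uuid_len] = '\0';
    memcpy(type, last_dot + 1, type_len + 1);  /* copies the terminator as well */
    *number = value;
    return 0;
}
```

For the file name quoted above, the parts would be the 36-character uuid, the number 1, and the type string videofx.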

Face props

Install the face prop asset to obtain its packageId, create an NveFaceProp object from the packageId, and assign it to [NveEffectKit shareInstance].prop. The prop's data is then recorded in [NveEffectKit shareInstance].prop.

NveFaceProp *prop = [NveFaceProp propWithPackageId:packageId];

[NveEffectKit shareInstance].prop = prop;

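At this point the data of every effect, from beauty to face props, is stored in the [NveEffectKit shareInstance] singleton. The next steps prepare the data to render and the configuration that rendering needs.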

Step 6: Configure the NveEffectKit render input (NveRenderInput)

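NveRenderInput is the input passed to the [NveEffectKit shareInstance] singleton. It contains the image data to process (texture or buffer) and the input/output configuration, such as the choice between texture in and texture out, buffer in and buffer out, or buffer in and texture out. The data structures are defined as follows: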

@interface NveRenderInput : NSObject

@property(nonatomic, strong) NveTexture *_Nullable texture; //!< Input texture

/*! Input pixelBuffer */

@property(nonatomic, strong) NveImageBuffer *_Nullable imageBuffer;

@property(nonatomic, strong) NveRenderConfig *config; //!< Input/output configuration

@end

@interface NveRenderConfig : NSObject

/// Render mode

@property(nonatomic, assign) NveRenderMode renderMode;

/// Output texture orientation

@property(nonatomic, assign) NveTextureLayout outputTextureLayout;

/// Output buffer format. .none: unspecified, same as the input

@property(nonatomic, assign) NvePixelFormatType outputFormatType;

/// Write the output into the input buffer

@property(nonatomic, assign) BOOL overlayInputBuffer;

// Whether the current image comes from the front camera; defaults to NO

@property(nonatomic, assign) BOOL isFromFrontCamera;

@property(nonatomic, assign) BOOL autoMotion;

@end

typedef NS_ENUM(NSInteger, NveRenderMode) {

NveRenderMode_texture_texture,

NveRenderMode_buffer_texture,

NveRenderMode_buffer_buffer

};

The input/output modes are configured as shown in the table below.

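| Render mode | Behavior | Requirement |
| --- | --- | --- |
| NveRenderMode_texture_texture | Renders a texture (texture in, texture out) | texture in the input must not be nil |
| NveRenderMode_buffer_texture | CVPixelBufferRef in, texture out | imageBuffer in the input must not be nil |
| NveRenderMode_buffer_buffer | CVPixelBufferRef in, CVPixelBufferRef out | imageBuffer in the input must not be nil |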
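The requirement column can be checked before a frame is handed to the renderer. The following plain C sketch expresses that rule; the enum is a hypothetical mirror of NveRenderMode and the function is not part of the SDK.

```c
#include <stdbool.h>

/* Hypothetical mirror of NveRenderMode, in the same order as the SDK enum. */
typedef enum {
    RENDER_MODE_TEXTURE_TEXTURE,
    RENDER_MODE_BUFFER_TEXTURE,
    RENDER_MODE_BUFFER_BUFFER
} RenderMode;

/* Returns true when the input carries the data the chosen mode requires. */
bool render_input_is_valid(RenderMode mode, bool has_texture, bool has_image_buffer) {
    switch (mode) {
    case RENDER_MODE_TEXTURE_TEXTURE:
        return has_texture;
    case RENDER_MODE_BUFFER_TEXTURE:
    case RENDER_MODE_BUFFER_BUFFER:
        return has_image_buffer;
    }
    return false;  /* unknown mode */
}
```

In an Objective-C caller the two flags would typically be derived from input.texture != nil and input.imageBuffer != nil.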

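Depending on the chosen input/output mode, pass the frame data (for example, from the AVFoundation capture callback) to the matching property of NveRenderInput in the appropriate form. NveRenderMode_buffer_buffer is used as the example: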

- (void)captureOutput:(AVCaptureOutput *)output didOutputSampleBuffer:(CMSampleBufferRef)sampleBuffer fromConnection:(AVCaptureConnection *)connection isAudioConnection:(BOOL)isAudioConnection {

// Code unrelated to this step is omitted; see the demo for the full code

if (isAudioConnection) {

[self.fileWriter appendAudioSampleBuffer:sampleBuffer];

} else {

EAGLContext *currentContext = [EAGLContext currentContext];

if (currentContext != self.glContext) {

[EAGLContext setCurrentContext:self.glContext];

}

// Render

CVPixelBufferRef pixelBuffer = CMSampleBufferGetImageBuffer(sampleBuffer);

if (pixelBuffer == nil) {

return;

}

NveRenderInput *input = [[NveRenderInput alloc] init];

// Fill in the data

NveImageBuffer *imageBuffer = [[NveImageBuffer alloc] init];

imageBuffer.pixelBuffer = pixelBuffer;

input.imageBuffer = imageBuffer;

input.imageBuffer.mirror = self.capture.position == AVCaptureDevicePositionFront;

// Render mode

input.config.renderMode = self.renderMode;

// Code unrelated to this step is omitted; see the demo for the full code

}

// Code unrelated to this step is omitted; see the demo for the full code

}

Step 7: Render

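After the previous steps, the [NveEffectKit shareInstance] singleton holds the data of every effect to render, and NveRenderInput holds the input image data and the input/output configuration. What remains is to pass the NveRenderInput to the singleton, which renders the effects onto the image, and to collect the rendered result as an NveRenderOutput. The code is as follows: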

- (void)captureOutput:(AVCaptureOutput *)output didOutputSampleBuffer:(CMSampleBufferRef)sampleBuffer fromConnection:(AVCaptureConnection *)connection isAudioConnection:(BOOL)isAudioConnection {

// Code unrelated to this step is omitted; see the demo for the full code

// Render

NveRenderOutput *output = [[NveEffectKit shareInstance] renderEffect:input];

if (output.errorCode != NveRenderError_noError) {

[self.effectKit recycleOutput:output];

return;

}

// Code unrelated to this step is omitted; see the demo for the full code

}

The output data structure is:

@interface NveRenderOutput : NSObject

@property(nonatomic, assign) NveRenderError errorCode;

@property(nonatomic, strong) NveTexture *texture;

@property(nonatomic, assign) CVPixelBufferRef _Nullable pixelBuffer;

@end

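Depending on whether the output reports an error, either discard it, or display it on screen and save it to a file (recording). The following is sample code from the demo; treat it as pseudocode and implement the logic your own project requires.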

// Display

CMTime presentationTimeStamp = CMSampleBufferGetPresentationTimeStamp(sampleBuffer);

CMTime duration = CMSampleBufferGetDuration(sampleBuffer);

[self.preview displayRenderOutput:output presentationTimeStamp:presentationTimeStamp duration:duration];

// Write to file

CMTime bufferTime = CMSampleBufferGetPresentationTimeStamp(sampleBuffer);

int64_t timelinePos = (int64_t)(bufferTime.value / 1000);

if (output.pixelBuffer != nil) {

[self.fileWriter appendPixelBuffer:output.pixelBuffer timelinePos:timelinePos];

} else {

[self.fileWriter appendTexture:output.texture.textureId videoSize:output.texture.size timelinePos:timelinePos];

}
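The demo derives timelinePos from bufferTime.value / 1000. A CMTime represents value / timescale seconds, so that division yields microseconds only when the timescale is 1,000,000,000. If your writer expects microseconds (an assumption here; check the contract of your own file writer) and your frames may arrive with another timescale, a conversion that takes the timescale into account is safer. The plain C sketch below shows one way; the function name is hypothetical.

```c
#include <stdint.h>

/* Converts a rational timestamp (value / timescale seconds, the layout CMTime uses)
   to whole microseconds, truncating any fraction.
   Returns -1 when timescale is not positive. */
int64_t rational_time_to_microseconds(int64_t value, int32_t timescale) {
    if (timescale <= 0) {
        return -1;
    }
    /* Split into whole seconds and remainder so that the multiplication by
       1,000,000 cannot overflow for large nanosecond-based values. */
    int64_t seconds = value / timescale;
    int64_t remainder = value % timescale;
    return seconds * 1000000 + (remainder * 1000000) / timescale;
}
```

In the capture callback this could be called as rational_time_to_microseconds(bufferTime.value, bufferTime.timescale). Capture timestamps are not negative, so the -1 error value does not collide with a real result in that setting.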

After you finish with the output (display, saving), release it so that the CVPixelBufferRef or texture created by NveEffectKit is recycled:

- (void)captureOutput:(AVCaptureOutput *)output didOutputSampleBuffer:(CMSampleBufferRef)sampleBuffer fromConnection:(AVCaptureConnection *)connection isAudioConnection:(BOOL)isAudioConnection {

// Code unrelated to this step is omitted; see the demo for the full code

// Recycle and release resources

[self.effectKit recycleOutput:output];

}
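The rule that follows from Step 7 is that every output obtained from a render call is recycled exactly once, whether the frame was rendered successfully or discarded. The sketch below models that rule in plain C with stand-in types. Output, Kit, and the kit_ functions are hypothetical and only count live outputs; they do not represent the SDK's implementation.

```c
#include <stdbool.h>

/* Hypothetical stand-ins for NveRenderOutput and the effect kit. */
typedef struct { bool has_error; } Output;
typedef struct { int outstanding; int displayed; } Kit;

/* Models a render call: the kit now has one more live output. */
static Output kit_render(Kit *kit, bool fail) {
    Output out = { fail };
    kit->outstanding += 1;
    return out;
}

/* Models recycling: the output's buffer or texture goes back to the kit. */
static void kit_recycle(Kit *kit, Output *out) {
    (void)out;
    kit->outstanding -= 1;
}

/* Processes one frame; returns true when the frame was displayed. */
bool process_frame(Kit *kit, bool fail) {
    Output out = kit_render(kit, fail);
    bool ok = !out.has_error;
    if (ok) {
        kit->displayed += 1;  /* display and/or write to file here */
    }
    kit_recycle(kit, &out);   /* single recycle point for both paths */
    return ok;
}
```

Routing both the error path and the success path through one recycle call makes it harder to leak an output or to recycle it twice when the callback grows.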

Asset installation

Every asset package that exists as a file must be installed before the SDK can use it:

NSString* packagePath = @"XXXX"; // Path of the effect package

NSString* packageLicPath = @"XXXX"; // Path of the .lic file for the effect package

NSMutableString *mutString = [NSMutableString string];

NvsAssetPackageManagerError error =

[[NveEffectKit shareInstance] installAssetPackage:packagePath

license:packageLicPath

type:NvsAssetPackageType_VideoFx

assetPackageId:mutString];

if (error == NvsAssetPackageManagerError_NoError) {

model.packageId = mutString;

} else {

NSLog(@"installAssetPackage error: %d", (int)error);

}

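Note: every asset (effect package) file has its own license file, and you must pass the path of the license file that belongs to that asset. type is the asset's type; see NvsAssetPackageType for the available values.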

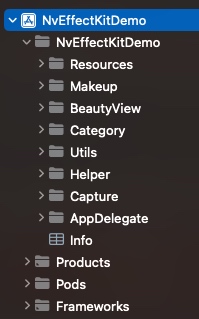

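You should now have a working integration. The following is a brief description of the demo files to help you understand the demo's structure quickly.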

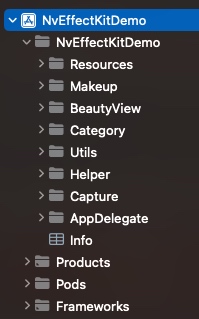

Overview of the files and classes in the demo

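| Folder | Contents |
| --- | --- |
| Resources | Resources (face models, effect packages, SDK license, and so on) |
| Makeup | Views and models for makeup and compose makeup |
| BeautyView | Views and models for beauty, face shaping, and micro shaping |
| Category | Categories |
| Utils | Utility classes |
| Helper | Capture, preview, and recording classes |
| Capture | Capture page view controller |
| AppDelegate | Application delegate class |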

| Class | Function |
| --- | --- |
| EFCapture | Camera management |
| NvDisplayView | Preview display |
| EFFileWriter | Video file recording |

1.8.18
