Module structure

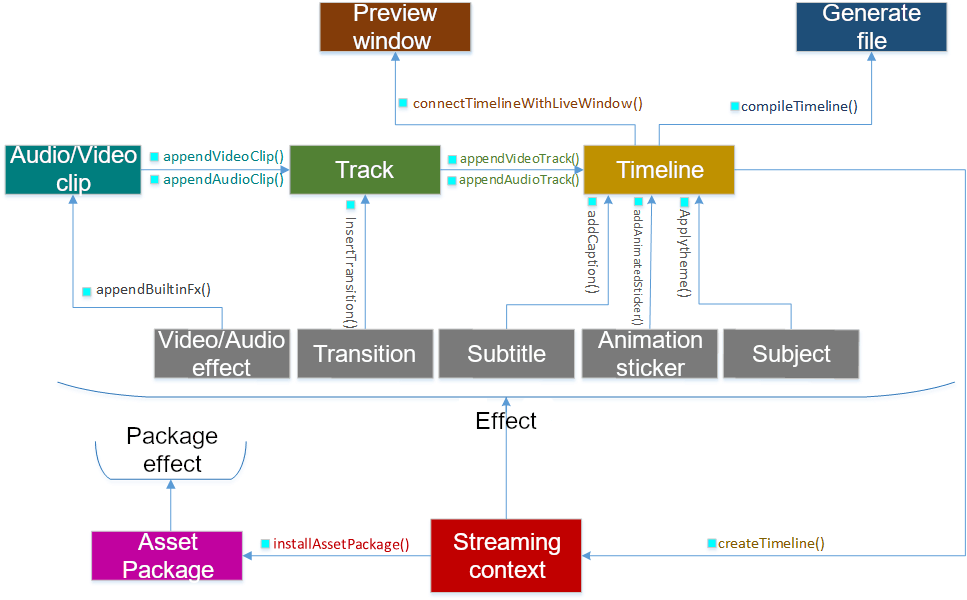

The core modules of the Meishe SDK include the streaming context, timeline, captions, animated stickers, tracks, and audio/video clips, among others.

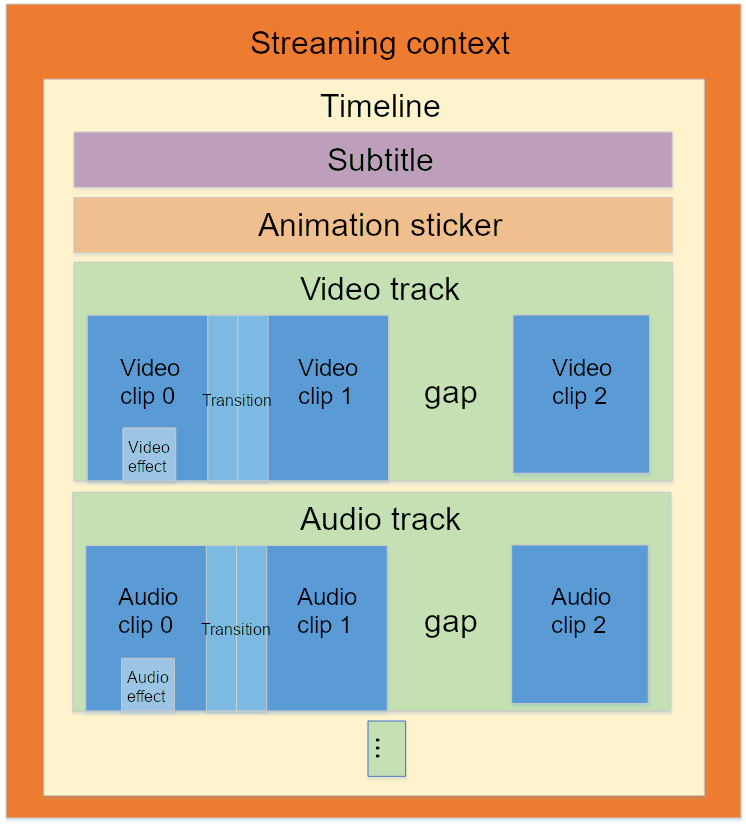

The streaming context is the most fundamental module in the SDK. It creates, stores, and maintains the context in which the SDK engine runs. Timelines are created by the streaming context. Each timeline contains captions, animated stickers, and multiple audio and video tracks, all of which work together to produce the final video. Each audio or video track can hold several audio or video clips, various effects can be applied to each clip, and different transitions can be set between clips.
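The containment relationships described above (context → timeline → tracks → clips, with effects on clips and transitions between them) can be sketched as a small object model. This is a hypothetical illustration only: every class, method, and effect name below is invented for the sketch and does not reflect the Meishe SDK's actual API.

```python
# Hypothetical model of the SDK's object hierarchy; NOT the Meishe API.
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Clip:
    """One audio or video clip taken from a source media file."""
    source: str
    effects: List[str] = field(default_factory=list)


@dataclass
class Track:
    """An ordered sequence of clips; transitions sit between neighbours."""
    kind: str  # "video" or "audio"
    clips: List[Clip] = field(default_factory=list)
    # transitions[i] is the transition between clips[i] and clips[i + 1]
    transitions: List[Optional[str]] = field(default_factory=list)

    def append_clip(self, source: str) -> Clip:
        clip = Clip(source)
        if self.clips:
            self.transitions.append(None)  # no transition until one is set
        self.clips.append(clip)
        return clip

    def set_transition(self, index: int, name: str) -> None:
        self.transitions[index] = name


@dataclass
class Timeline:
    """Holds tracks plus timeline-level captions and animated stickers."""
    video_tracks: List[Track] = field(default_factory=list)
    audio_tracks: List[Track] = field(default_factory=list)
    captions: List[str] = field(default_factory=list)
    animated_stickers: List[str] = field(default_factory=list)

    def append_video_track(self) -> Track:
        track = Track("video")
        self.video_tracks.append(track)
        return track

    def append_audio_track(self) -> Track:
        track = Track("audio")
        self.audio_tracks.append(track)
        return track


class StreamingContext:
    """Root object: maintains the running context and creates timelines."""

    def __init__(self) -> None:
        self.timelines: List[Timeline] = []

    def create_timeline(self) -> Timeline:
        timeline = Timeline()
        self.timelines.append(timeline)
        return timeline


# Usage: one timeline, one video track with two clips joined by a transition.
ctx = StreamingContext()
timeline = ctx.create_timeline()
track = timeline.append_video_track()
track.append_clip("a.mp4")
second = track.append_clip("b.mp4")
second.effects.append("blur")
track.set_transition(0, "fade")
timeline.captions.append("Hello")
print(len(track.clips), track.transitions)  # 2 ['fade']
```

Storing transitions in a list parallel to the gaps between clips keeps the "transition between clips" rule explicit: a track with n clips has exactly n - 1 transition slots.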

Data stream

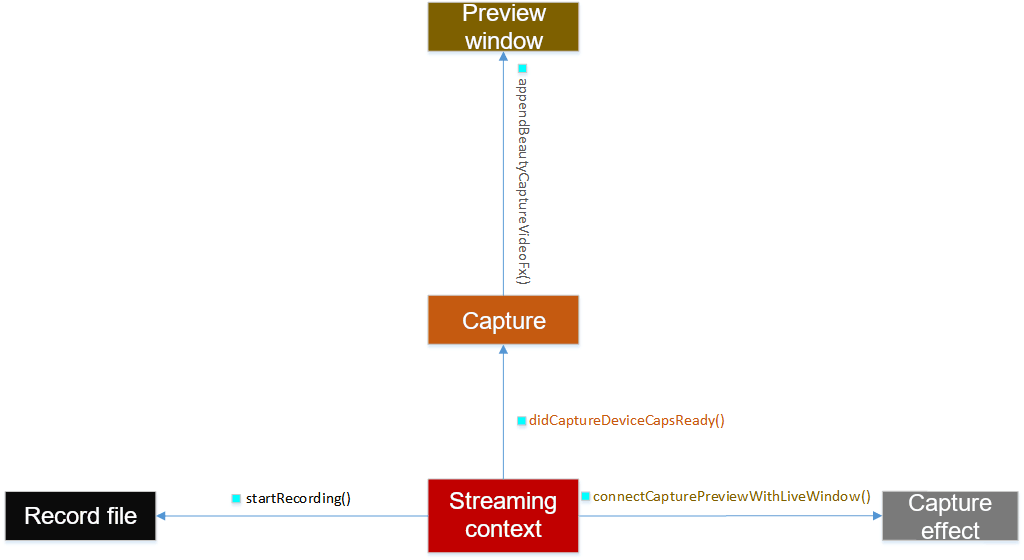

The Meishe SDK supports two types of media input: standard media files and camera capture.

When the input is one or more media files, the SDK first extracts the corresponding audio and video clips from each file; a clip can be regarded as a cut and encapsulated segment of the original audio or video stream. Taking video as an example, during editing the user splices video clips onto a track as needed, and the track is then placed on the timeline. Each track can be regarded as a layer, and the SDK engine renders tracks from the bottom up. Special effects can be achieved on each layer by setting parameters such as opacity.

The SDK engine supports adding effects to timelines, tracks, and audio/video clips. The effects include audio/video effects, transitions, captions, animated stickers, and themes; a theme can be thought of as a package that combines the other effects. The SDK provides several built-in editing effects by default, and more effect packages can be downloaded from the Meishe website. The edited video can be previewed in the live window provided by the SDK or compiled directly into a media file.
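The bottom-up rendering of tracks as layers with opacity can be illustrated with a single pixel. This sketch assumes the standard "over" blend (result = opacity × layer + (1 − opacity) × below) on one RGB value; the SDK's real compositing, blend modes, and parameter names may differ, and `Layer` and `composite` are hypothetical names.

```python
# Hypothetical single-pixel model of bottom-up track compositing.
# Assumes the standard "over" blend; the SDK's real blending may differ.
from dataclasses import dataclass
from typing import List, Tuple

RGB = Tuple[float, float, float]


@dataclass
class Layer:
    name: str
    color: RGB      # this layer's pixel colour, components in [0, 1]
    opacity: float  # 0.0 = fully transparent, 1.0 = fully opaque


def composite(layers_bottom_up: List[Layer],
              background: RGB = (0.0, 0.0, 0.0)) -> RGB:
    """Blend layers in order: index 0 is the bottom track, the last is the top."""
    result = background
    for layer in layers_bottom_up:
        a = layer.opacity
        # Each new layer is drawn over everything already rendered beneath it.
        result = tuple(a * c + (1.0 - a) * r
                       for c, r in zip(layer.color, result))
    return result


bottom = Layer("bottom track", (1.0, 0.0, 0.0), 1.0)  # opaque red
top = Layer("top track", (0.0, 0.0, 1.0), 0.25)       # 25% opaque blue
print(composite([bottom, top]))  # (0.75, 0.0, 0.25)
```

Because each layer is blended over the accumulated result, order matters: passing the same two layers top-first would let the opaque red layer hide the blue one completely.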

When the camera is used as the input device, the SDK engine can apply only capture effects and package effects to it; transitions, captions, animated stickers, and other special effects are not available. The resulting video can likewise be previewed in the live window provided by the SDK or compiled directly into a media file.
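The camera-input restriction can be expressed as a simple whitelist check. This is a hypothetical sketch: `EffectKind`, `CameraSession`, and `add_effect` are invented names, and the only rule encoded is the one stated above (camera capture accepts capture effects and package effects, nothing else).

```python
# Hypothetical whitelist for effects on camera input; NOT the Meishe API.
from enum import Enum, auto
from typing import List, Tuple


class EffectKind(Enum):
    CAPTURE_EFFECT = auto()
    PACKAGE_EFFECT = auto()
    TRANSITION = auto()
    CAPTION = auto()
    ANIMATED_STICKER = auto()
    THEME = auto()


# Per the text above, camera input accepts only these two kinds.
CAMERA_ALLOWED = frozenset({EffectKind.CAPTURE_EFFECT, EffectKind.PACKAGE_EFFECT})


class CameraSession:
    def __init__(self) -> None:
        self.effects: List[Tuple[EffectKind, str]] = []

    def add_effect(self, kind: EffectKind, name: str) -> None:
        # Reject anything outside the whitelist instead of silently ignoring it.
        if kind not in CAMERA_ALLOWED:
            raise ValueError(f"{kind.name} cannot be applied to camera input")
        self.effects.append((kind, name))


session = CameraSession()
session.add_effect(EffectKind.CAPTURE_EFFECT, "beauty")
try:
    session.add_effect(EffectKind.CAPTION, "Hello")
except ValueError as err:
    print(err)  # CAPTION cannot be applied to camera input
```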

1.8.18