Meishe SDK License Description

StreamingSDK can be used without authorization during testing, but a watermark will be present on the output video.

EffectSDK requires both an SDK trial authorization and asset authorizations during the testing phase; otherwise, the effects will not be applied.

I. SDK Authorization Application Process:

1. Open the official website, en.meishesdk.com, and register a user account.

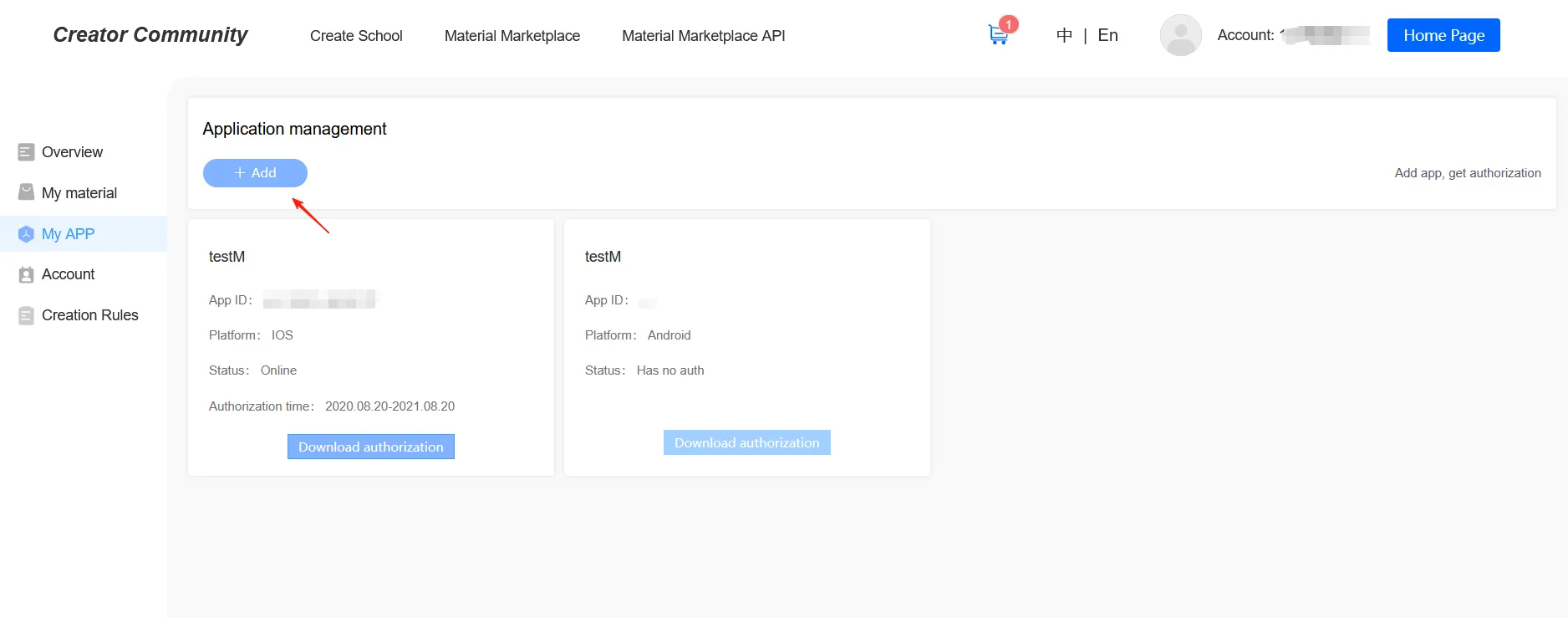

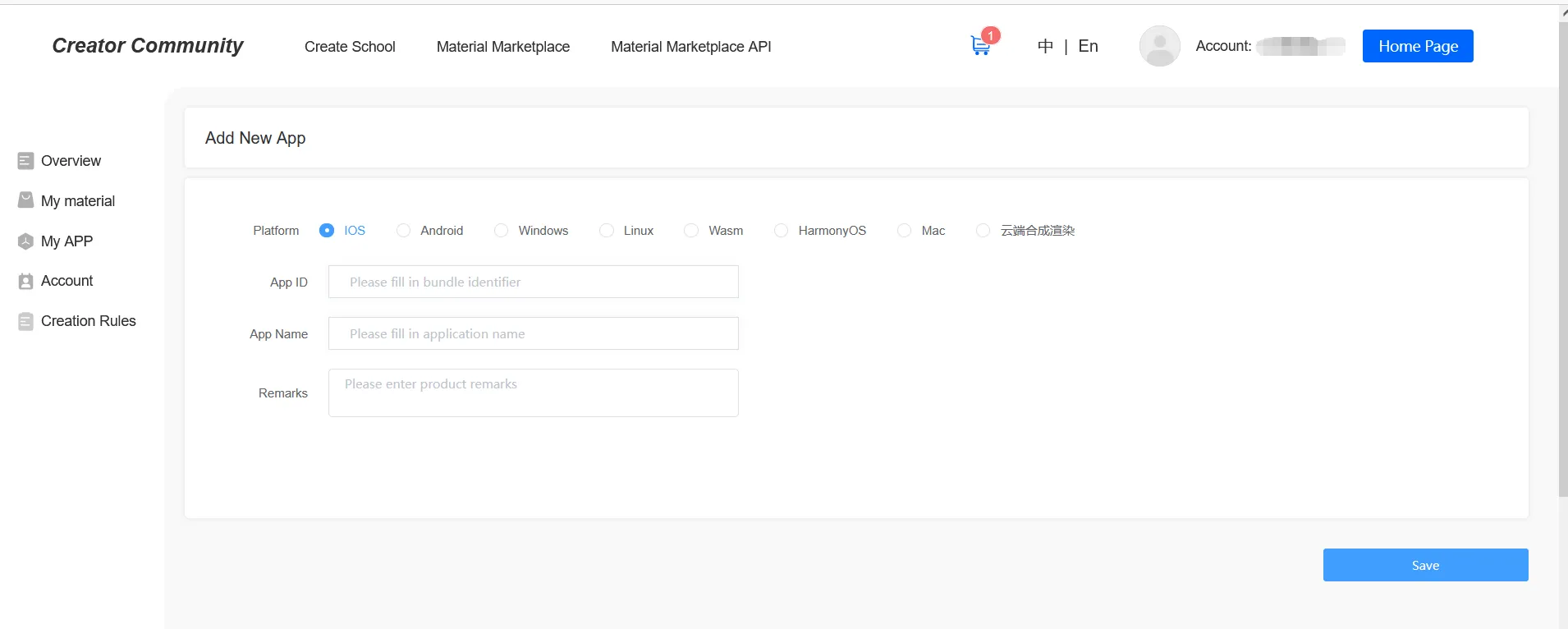

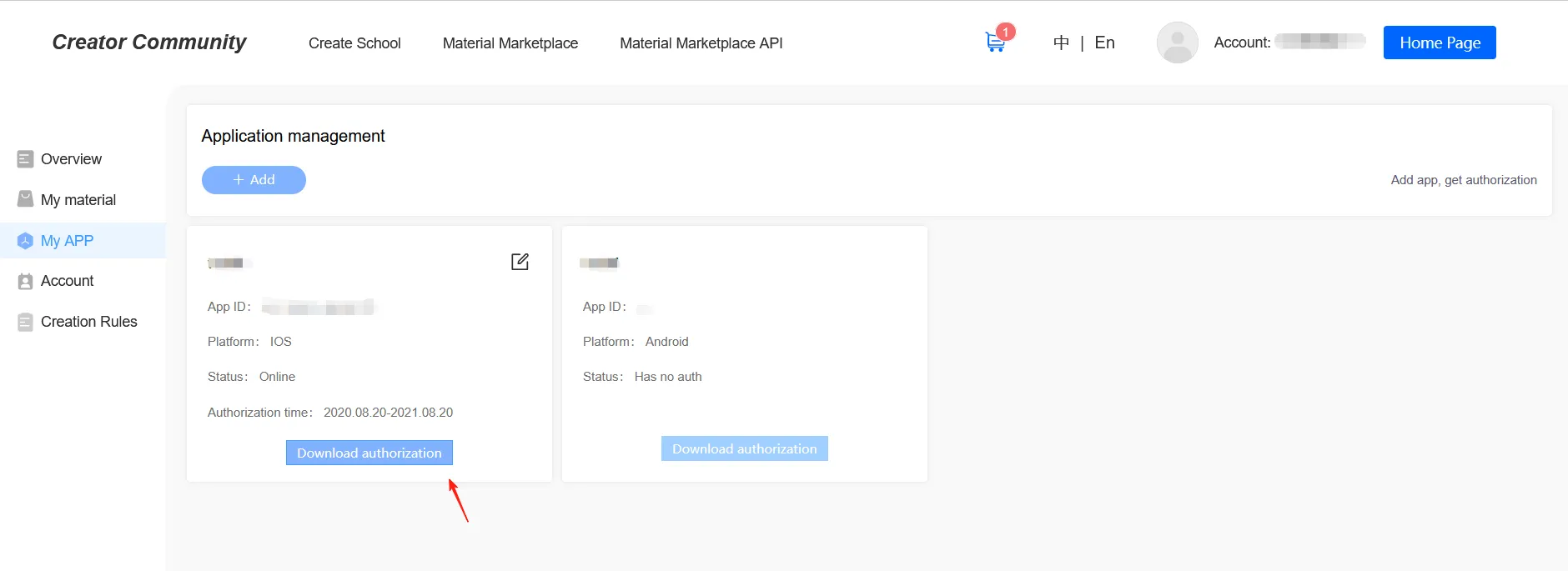

2. Log in to the user console, create a product in the Application Management section, and fill in the product's bundle ID and package name. (If the product will later need formal authorization, use a business master account rather than a personal account, so the product does not have to be migrated afterwards.)

3. Contact Meishe's business team to request authorization. The license is issued according to the SDK features you need. Once authorization is granted, you can download the license for the product you just created.

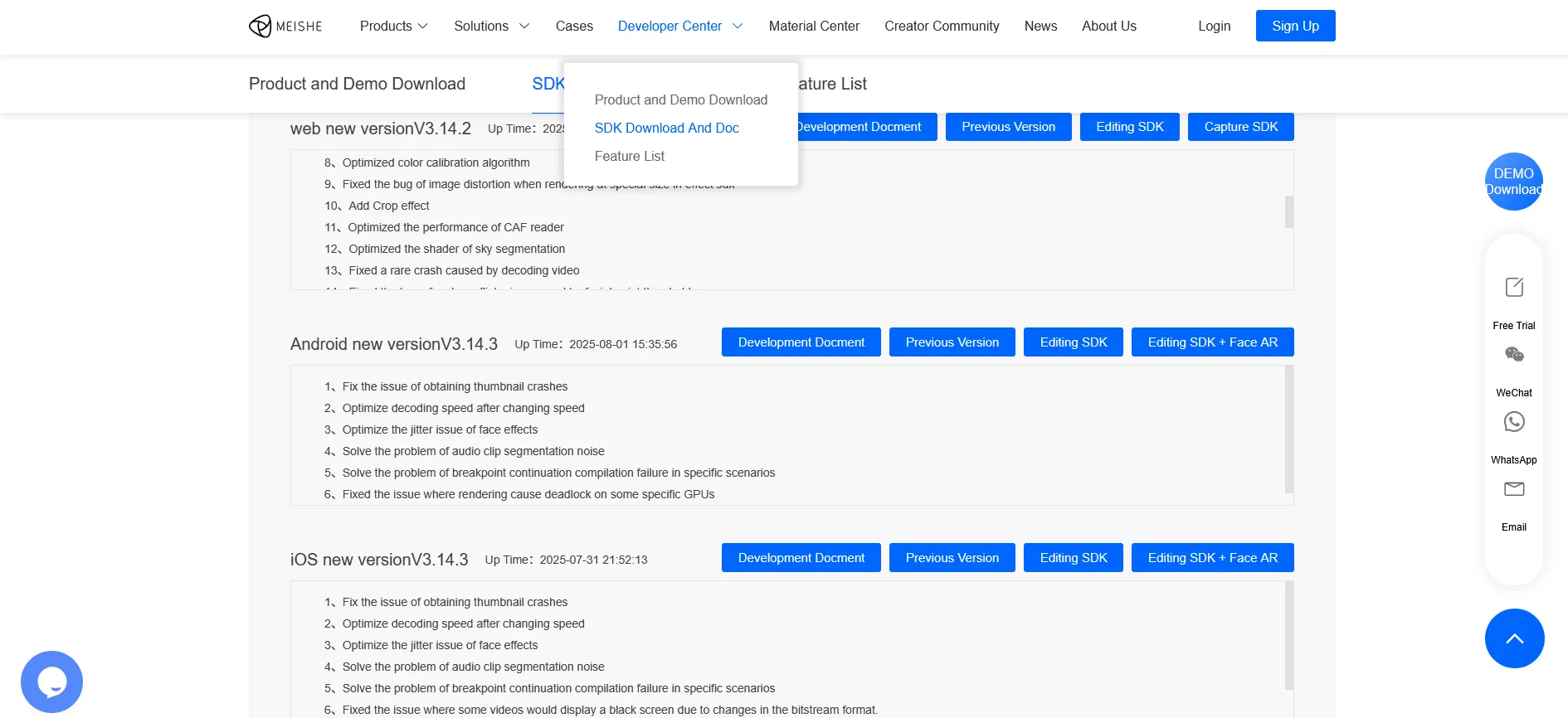

- Obtain the SDK package: The latest Meishe SDK package can be obtained on the en.meishesdk.com website. If facial recognition features are needed, please download the SDK package that includes the face module.

II. Methods for Using SDK Authorization on Various Platforms

*Important: The SDK license file cannot be used for asset authorization.

2.1 iOS License Usage Method

NSString *licPath = [[[NSBundle mainBundle] bundlePath] stringByAppendingPathComponent:@"license/meishesdk.lic"];

BOOL result = [NvsStreamingContext verifySdkLicenseFile:licPath];

// If 4K support is not required or this license hasn't been purchased, do not add this flag: NvsStreamingContextFlag_Support4KEdit

[NvsStreamingContext sharedInstanceWithFlags:NvsStreamingContextFlag_Support4KEdit];

if (!result) {

NSLog(@"Meishe SDK license verification failed!");

}

Facial recognition licensing requires 3 pieces of information:

1. License path

2. Model path

3. facecommon.dat path

The code is as follows. Execute the authorization only once.

NSString *bundleid = [[NSBundle mainBundle] bundleIdentifier];

NSString *licPath = nil;

NSString *bundlePath = [[NSBundle mainBundle] pathForResource:@"license" ofType:@"bundle"];

// Copy the model from the SDK demo project; the SDK demo always has the latest models. Ensure the model path is correct.

NSString *modelPath = [[[bundlePath stringByAppendingPathComponent:@"license"] stringByAppendingPathComponent:@"ms"] stringByAppendingPathComponent:@"ms_face106_v3.0.0.model"];

if (model == NvFaceMode_240){

modelPath = [[[bundlePath stringByAppendingPathComponent:@"license"] stringByAppendingPathComponent:@"ms"] stringByAppendingPathComponent:@"ms_face240_v3.0.1.model"];

}

// Check if the SDK includes face functionality (this does not necessarily mean you have the license for it)

int type = [NvsStreamingContext hasARModule];

if (type > 0) {

isInitArFaceSuccess = [NvsStreamingContext initHumanDetection:modelPath licenseFilePath:licPath features:NvsHumanDetectionFeature_FaceLandmark|NvsHumanDetectionFeature_FaceAction | NvsHumanDetectionFeature_SemiImageMode];

if(isInitArFaceSuccess) {

NSLog(@"Face initialization succeeded");

}else{

NSLog(@"Face initialization failed");

}

/// Initialize the general face model. This model needs to be initialized when using the face function.

NSString *facecommonPath = [[[bundlePath stringByAppendingPathComponent:@"license"]stringByAppendingPathComponent:@"ms"] stringByAppendingPathComponent:@"facecommon_v1.0.1.dat"];

[NvsStreamingContext setupHumanDetectionData:NvsHumanDetectionDataType_FaceCommon dataFilePath:facecommonPath];

// If you want to use the segmentation function, include this model. For older, less powerful phones, please use the small version.

NSString *segFilePath = [[[bundlePath stringByAppendingPathComponent:@"license"]stringByAppendingPathComponent:@"ms"] stringByAppendingPathComponent:@"ms_humanseg_medium_v2.0.0.model"];

BOOL highVersion = [NvInitArScence isHighVersionPhone];

if (highVersion == NO) {

segFilePath = [[[bundlePath stringByAppendingPathComponent:@"license"]stringByAppendingPathComponent:@"ms"] stringByAppendingPathComponent:@"ms_humanseg_small_v2.0.0.model"];

}

[NvsStreamingContext initHumanDetectionExt:segFilePath licenseFilePath:nil features:NvsHumanDetectionFeature_Background];

// If you want to use gesture recognition, include this model

NSString *handActionPath = [[[bundlePath stringByAppendingPathComponent:@"license"]stringByAppendingPathComponent:@"ms"] stringByAppendingPathComponent:@"ms_hand_v2.0.0.model"];

[NvsStreamingContext initHumanDetectionExt:handActionPath licenseFilePath:nil features:NvsHumanDetectionFeature_HandAction|NvsHumanDetectionFeature_HandLandmark];

// If you want to use eyeball detection, include this model

NSString *eyeballPath = [[[bundlePath stringByAppendingPathComponent:@"license"]stringByAppendingPathComponent:@"ms"] stringByAppendingPathComponent:@"ms_eyecontour_v2.0.0.model"];

[NvsStreamingContext initHumanDetectionExt:eyeballPath licenseFilePath:nil features:NvsHumanDetectionFeature_EyeballLandmark|NvsHumanDetectionFeature_SemiImageMode];

// If you want to use the fake face function (like face stickers/mask effects), include this model

NSString *fakefacePath = [[[bundlePath stringByAppendingPathComponent:@"license"]stringByAppendingPathComponent:@"ms"] stringByAppendingPathComponent:@"fakeface_v1.0.1.dat"];

[NvsStreamingContext setupHumanDetectionData:NvsHumanDetectionDataType_FakeFace dataFilePath:fakefacePath];

// If you want to use the avatar function, include this model

NSString *avatarFilePath = [[[bundlePath stringByAppendingPathComponent:@"license"]stringByAppendingPathComponent:@"ms"] stringByAppendingPathComponent:@"ms_avatar_v2.0.0.model"];

[NvsStreamingContext initHumanDetectionExt:avatarFilePath licenseFilePath:nil features:NvsHumanDetectionFeature_AvatarExpression];

/// Advanced Beauty model initialization

NSString *advancedbeautyPath = [[[bundlePath stringByAppendingPathComponent:@"license"]stringByAppendingPathComponent:@"ms"] stringByAppendingPathComponent:@"advancedbeauty_v1.0.1.dat"];

[NvsStreamingContext setupHumanDetectionData:NvsHumanDetectionDataType_AdvancedBeauty dataFilePath:advancedbeautyPath];

}

2.2 Android License Usage Method

Meishe SDK licensing method:

String licensePath = "assets:/meishesdk.lic";

mStreamingContext = NvsStreamingContext.init(getApplicationContext(), licensePath, NvsStreamingContext.STREAMING_CONTEXT_FLAG_SUPPORT_4K_EDIT);
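The iOS section notes that the 4K flag should be left out when 4K editing is not needed or has not been licensed. On Android the same decision can be made before calling `NvsStreamingContext.init`. The following is only a sketch under stated assumptions: `StreamingFlags` is a hypothetical helper, the constant is a local placeholder standing in for `NvsStreamingContext.STREAMING_CONTEXT_FLAG_SUPPORT_4K_EDIT` (its numeric value here is not taken from the SDK), and passing `0` to mean "no flags" is an assumption to confirm against the SDK reference.

```java
/** Hypothetical helper, not part of the Meishe SDK: picks the flags value passed to init. */
final class StreamingFlags {
    // Placeholder for NvsStreamingContext.STREAMING_CONTEXT_FLAG_SUPPORT_4K_EDIT.
    // The value is an assumption for this sketch; use the SDK constant in real code.
    static final int SUPPORT_4K_EDIT = 1;

    // Assumption: 0 means "no optional flags".
    static final int NO_FLAGS = 0;

    private StreamingFlags() {
    }

    /** Returns the 4K flag only when 4K editing is both wanted and licensed. */
    static int choose(boolean has4kLicense) {
        return has4kLicense ? SUPPORT_4K_EDIT : NO_FLAGS;
    }
}
```

In an app, the result of `StreamingFlags.choose(...)` would replace the hard-coded third argument in the `init` call above.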

Facial recognition licensing requires a license path, a model path, and a face common data path.

The code is as follows. Execute the authorization only once.

/**

* Face model initialization. The basic face model and the face common model are mandatory; the others are optional and can be added as needed.

* The paths are not fixed; just pass the correct path.

* The following code is from the SDK demo file: com.meishe.sdkdemo.MeicamContextWrap#initArSceneEffect

*/

// Mandatory: Basic face model file

String modelPath = "assets:/facemode/ms/" + FACE_106_MODEL;

String licensePath = "";

if (BuildConfig.FACE_MODEL == 240) {

modelPath = "assets:/facemode/ms/" + FACE_240_MODEL;

}

boolean initSuccess = NvsStreamingContext.initHumanDetection(MSApplication.getContext(),

modelPath, licensePath,

NvsStreamingContext.HUMAN_DETECTION_FEATURE_FACE_LANDMARK |

NvsStreamingContext.HUMAN_DETECTION_FEATURE_FACE_ACTION |

NvsStreamingContext.HUMAN_DETECTION_FEATURE_SEMI_IMAGE_MODE);

/*

* Corresponding part for the Effect SDK (can be omitted if not used)

*/

NvsEffectSdkContext.initHumanDetection(MSApplication.getContext(),

modelPath, licensePath,

NvsStreamingContext.HUMAN_DETECTION_FEATURE_FACE_LANDMARK |

NvsStreamingContext.HUMAN_DETECTION_FEATURE_FACE_ACTION |

NvsStreamingContext.HUMAN_DETECTION_FEATURE_SEMI_IMAGE_MODE);

//----------------------------------------------------------------------------------------------------

/*

* Mandatory: Face common model initialization

*/

String faceCommonPath = "assets:/facemode/common/" + FACE_COMMON_DAT;

boolean faceCommonSuccess = NvsStreamingContext.setupHumanDetectionData(NvsStreamingContext.HUMAN_DETECTION_DATA_TYPE_FACE_COMMON, faceCommonPath);

/*

* Corresponding part for the Effect SDK (can be omitted if not used)

*/

NvsEffectSdkContext.setupHumanDetectionData(NvsEffectSdkContext.HUMAN_DETECTION_DATA_TYPE_FACE_COMMON, faceCommonPath);

//----------------------------------------------------------------------------------------------------

/*

* Advanced beauty model initialization

*/

String advancedBeautyPath = "assets:/facemode/common/" + ADVANCED_BEAUTY_DAT;

boolean advancedBeautySuccess = NvsStreamingContext.setupHumanDetectionData(NvsStreamingContext.HUMAN_DETECTION_DATA_TYPE_ADVANCED_BEAUTY, advancedBeautyPath);

/*

* Corresponding part for the Effect SDK (can be omitted if not used)

*/

NvsEffectSdkContext.setupHumanDetectionData(NvsEffectSdkContext.HUMAN_DETECTION_DATA_TYPE_ADVANCED_BEAUTY, advancedBeautyPath);

//----------------------------------------------------------------------------------------------------

/*

* Fake face model initialization, used for mask-like effects that only follow the movement of the face

*/

String fakeFacePath = "assets:/facemode/common/" + FAKE_FACE_DAT;

boolean fakefaceSuccess = NvsStreamingContext.setupHumanDetectionData(NvsStreamingContext.HUMAN_DETECTION_DATA_TYPE_FAKE_FACE, fakeFacePath);

/*

* Corresponding part for the Effect SDK (can be omitted if not used)

*/

NvsEffectSdkContext.setupHumanDetectionData(NvsStreamingContext.HUMAN_DETECTION_DATA_TYPE_FAKE_FACE, fakeFacePath);

//----------------------------------------------------------------------------------------------------

/*

* Portrait Background Segmentation Model

*/

String segPath = "assets:/facemode/common/" + HUMAN_SEG_MODEL;

if (null != NvsStreamingContext.getInstance()) {

int level = NvsStreamingContext.getInstance().getDeviceCpuLevel();

Logger.e(TAG, "MS CPU level:-->" + level);

if (level == NvsStreamingContext.DEVICE_POWER_LEVEL_LOW) {

segPath = "assets:/facemode/common/" + HUMAN_SEG_LEVEL_LOW_MODEL;

}

}

boolean segSuccess = NvsStreamingContext.initHumanDetectionExt(MSApplication.getContext(),

segPath, null, NvsStreamingContext.HUMAN_DETECTION_FEATURE_SEGMENTATION_BACKGROUND);

/*

* Corresponding part for the Effect SDK (can be omitted if not used)

*/

NvsEffectSdkContext.initHumanDetectionExt(MSApplication.getContext(),

segPath, null, NvsStreamingContext.HUMAN_DETECTION_FEATURE_SEGMENTATION_BACKGROUND);

//----------------------------------------------------------------------------------------------------

/*

* Hand landmark (gesture) model; effects such as the heart gesture use this model

*/

String handPath = "assets:/facemode/common/" + HAND_MODEL;

boolean handSuccess = NvsStreamingContext.initHumanDetectionExt(MSApplication.getContext(), handPath, null,

NvsStreamingContext.HUMAN_DETECTION_FEATURE_HAND_LANDMARK | NvsStreamingContext.HUMAN_DETECTION_FEATURE_HAND_ACTION);

/*

* Corresponding part for the Effect SDK (can be omitted if not used)

*/

NvsEffectSdkContext.initHumanDetectionExt(MSApplication.getContext(), handPath, null,

NvsStreamingContext.HUMAN_DETECTION_FEATURE_HAND_LANDMARK | NvsStreamingContext.HUMAN_DETECTION_FEATURE_HAND_ACTION);

//----------------------------------------------------------------------------------------------------

/*

* Eyeball model initialization, used for the beauty option 'Colored Contacts' (Mei Tong). Initialization can be skipped if not using colored contacts.

*/

String eyeBallModelPath = "assets:/facemode/common/" + EYE_CONTOUR_MODEL;

boolean eyeBallSuccess = NvsStreamingContext.initHumanDetectionExt(MSApplication.getContext(), eyeBallModelPath, null

, NvsStreamingContext.HUMAN_DETECTION_FEATURE_EYEBALL_LANDMARK | NvsStreamingContext.HUMAN_DETECTION_FEATURE_SEMI_IMAGE_MODE);

/*

* Corresponding part for the Effect SDK (can be omitted if not used)

*/

NvsEffectSdkContext.initHumanDetectionExt(MSApplication.getContext(),

eyeBallModelPath, null, NvsEffectSdkContext.HUMAN_DETECTION_FEATURE_EYEBALL_LANDMARK);

//----------------------------------------------------------------------------------------------------

/*

* Avatar model initialization. Effects such as the little fox, which animate based on facial expressions, use this model.

*/

modelPath = "assets:/facemode/common/" + AVATAR_MODEL;

boolean avatarSuccess = NvsStreamingContext.initHumanDetectionExt(MSApplication.getContext(),

modelPath,

null,

NvsStreamingContext.HUMAN_DETECTION_FEATURE_AVATAR_EXPRESSION);

/*

* Corresponding part for the Effect SDK (can be omitted if not used)

*/

NvsEffectSdkContext.initHumanDetectionExt(MSApplication.getContext(),

modelPath,

null,

NvsStreamingContext.HUMAN_DETECTION_FEATURE_AVATAR_EXPRESSION);
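The listing above stores each result in a boolean (`initSuccess`, `faceCommonSuccess`, `segSuccess`, and so on) without inspecting it, and the text asks that authorization run only once. One possible way to handle both is sketched below. `ModelInitTracker` is a hypothetical helper, not part of the Meishe SDK; each step is passed in as a `BooleanSupplier`, so the sketch makes no assumption about the SDK calls themselves.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.BooleanSupplier;

/** Hypothetical helper, not part of the Meishe SDK: runs init steps once and records results. */
final class ModelInitTracker {
    private boolean started = false;
    private final Map<String, Boolean> results = new LinkedHashMap<>();

    /**
     * Runs every step on the first call and returns true.
     * Later calls run nothing and return false.
     */
    synchronized boolean runOnce(Map<String, BooleanSupplier> steps) {
        if (started) {
            return false;
        }
        started = true;
        for (Map.Entry<String, BooleanSupplier> step : steps.entrySet()) {
            results.put(step.getKey(), step.getValue().getAsBoolean());
        }
        return true;
    }

    /** Names of the steps that returned false, in the order they ran. */
    synchronized List<String> failedSteps() {
        List<String> failed = new ArrayList<>();
        for (Map.Entry<String, Boolean> entry : results.entrySet()) {
            if (!entry.getValue()) {
                failed.add(entry.getKey());
            }
        }
        return failed;
    }
}
```

A caller would register one entry per model, for example `steps.put("faceCommon", () -> NvsStreamingContext.setupHumanDetectionData(...))`, using a `LinkedHashMap` so the mandatory models run first, and then log `failedSteps()` after `runOnce` returns.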