Meishe SDK License Guide

StreamingSDK can be used without a license during testing, but the output will carry a watermark.

EffectSDK requires both an SDK test license and an asset license even during testing; without them the effects will not take effect.

I. SDK license application process:

- 打开www.meishesdk.com官网,注册用户

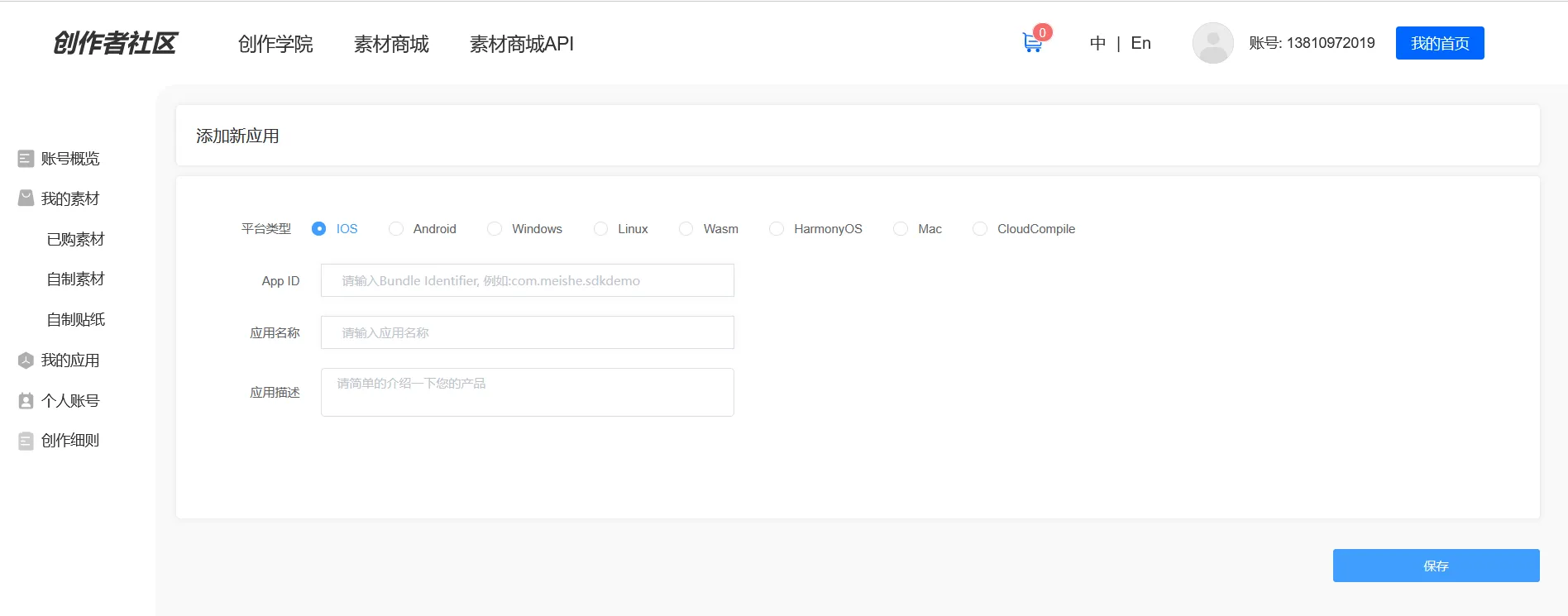

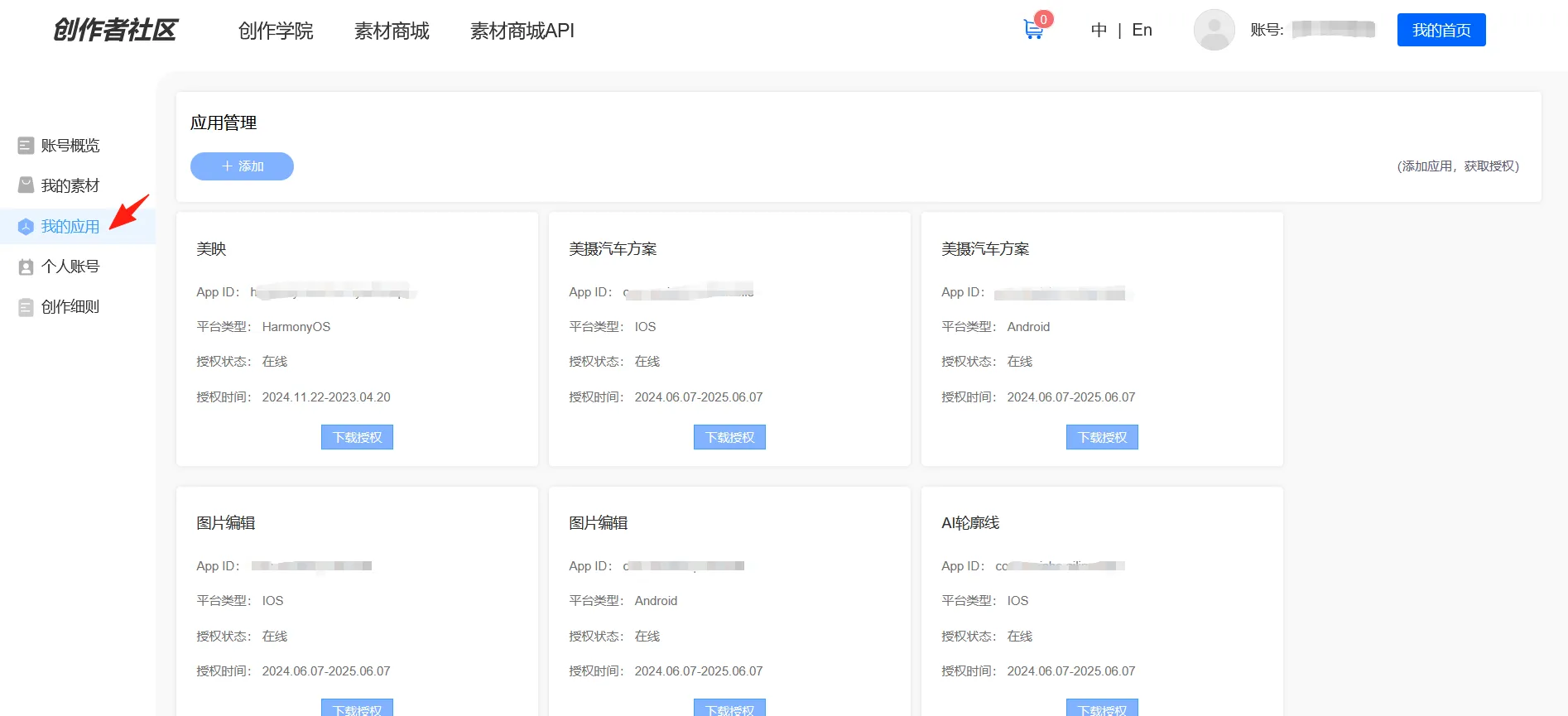

- 进入用户后台,在应用管理中创建一个产品,并填写产品的bundle id和package name(如果后续是要作为正式授权的应用,请使用业务主账号,避免使用个人账号,避免后续迁移麻烦)

3. 联系商务人员进行授权,主要依据用户需求的SDK功能进行授权。

授权后在刚才创建的产品进行下载即可

4、获得SDK包:在www.meishesdk.com网站上可以获得最新美摄SDK包,如果需要人脸功能,请下载带人脸sdk包

II. Using the SDK license on each platform

*Note first that the SDK license file cannot be used for asset licensing.

2.1 Using the license on iOS

Licensing the Meishe SDK:

```objectivec
NSString *licPath = [[[NSBundle mainBundle] bundlePath] stringByAppendingPathComponent:@"license/meishesdk.lic"];
BOOL result = [NvsStreamingContext verifySdkLicenseFile:licPath];
// Drop NvsStreamingContextFlag_Support4KEdit if you do not need 4K support or have not purchased that license.
[NvsStreamingContext sharedInstanceWithFlags:NvsStreamingContextFlag_Support4KEdit];
if (!result) {
    NSLog(@"Meishe SDK license verification failed!");
}
```

Face licensing requires three pieces of information:

- the license path
- the model path
- the facecommon.dat path
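To make the three inputs concrete, here is a minimal Java sketch that bundles them and reports which ones are missing before any SDK call is made. The class `FaceLicenseInputs` and its method names are hypothetical and not part of the Meishe SDK, and the paths in the demo are placeholders. Because the sample code in this guide passes the license path as `nil` (iOS) or an empty string (Android), the sketch treats that path as optional. That is an assumption drawn from the samples, not a documented rule.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical holder for the three paths used by the face license step. */
final class FaceLicenseInputs {
    final String licensePath;    // may be null or empty, as in the samples in this guide
    final String modelPath;      // face model file
    final String faceCommonPath; // facecommon.dat file

    FaceLicenseInputs(String licensePath, String modelPath, String faceCommonPath) {
        this.licensePath = licensePath;
        this.modelPath = modelPath;
        this.faceCommonPath = faceCommonPath;
    }

    /** Names of the required paths that are null or blank; an empty list means none are missing. */
    List<String> missingRequired() {
        List<String> missing = new ArrayList<>();
        if (isBlank(modelPath)) missing.add("modelPath");
        if (isBlank(faceCommonPath)) missing.add("faceCommonPath");
        return missing;
    }

    private static boolean isBlank(String s) {
        return s == null || s.trim().isEmpty();
    }
}

class FaceLicenseInputsDemo {
    public static void main(String[] args) {
        // Placeholder paths for illustration only.
        FaceLicenseInputs inputs = new FaceLicenseInputs(
                "", "assets:/facemode/ms/face.model", "");
        System.out.println("Missing: " + inputs.missingRequired());
    }
}
```

Checking the paths up front turns a silent initialization failure into a message that names the missing file.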

The code is as follows; the authorization only needs to run once:

```objectivec
NSString *bundleid = [[NSBundle mainBundle] bundleIdentifier];
NSString *licPath = nil;
NSString *bundlePath = [[NSBundle mainBundle] pathForResource:@"license" ofType:@"bundle"];
// Copy the models from sdkdemo, which always ships the latest models, and make sure the model paths are correct.
NSString *modelPath = [[[bundlePath stringByAppendingPathComponent:@"license"] stringByAppendingPathComponent:@"ms"] stringByAppendingPathComponent:@"ms_face106_v3.0.0.model"];
// `model` and NvFaceMode_240 are not defined in this snippet; they are assumed to come from the host app.
if (model == NvFaceMode_240) {
    modelPath = [[[bundlePath stringByAppendingPathComponent:@"license"] stringByAppendingPathComponent:@"ms"] stringByAppendingPathComponent:@"ms_face240_v3.0.1.model"];
}
// Check whether the SDK build includes the face module (this does not mean you hold the license for it).
int type = [NvsStreamingContext hasARModule];
if (type > 0) {
    BOOL isInitArFaceSuccess = [NvsStreamingContext initHumanDetection:modelPath licenseFilePath:licPath features:NvsHumanDetectionFeature_FaceLandmark | NvsHumanDetectionFeature_FaceAction | NvsHumanDetectionFeature_SemiImageMode];
    if (isInitArFaceSuccess) {
        NSLog(@"Initialize face successfully !!!!!");
    } else {
        NSLog(@"Initialize face Failed !!!!!");
    }

    // Initialize the general face model. This model needs to be initialized when using the face function.
    NSString *facecommonPath = [[[bundlePath stringByAppendingPathComponent:@"license"] stringByAppendingPathComponent:@"ms"] stringByAppendingPathComponent:@"facecommon_v1.0.1.dat"];
    [NvsStreamingContext setupHumanDetectionData:NvsHumanDetectionDataType_FaceCommon dataFilePath:facecommonPath];

    // Include this model if you want the segmentation feature; on older, lower-end phones use the small version.
    NSString *segFilePath = [[[bundlePath stringByAppendingPathComponent:@"license"] stringByAppendingPathComponent:@"ms"] stringByAppendingPathComponent:@"ms_humanseg_medium_v2.0.0.model"];
    // NvInitArScence is not defined in this snippet; it is assumed to be a helper class from the demo.
    BOOL highVersion = [NvInitArScence isHighVersionPhone];
    if (highVersion == NO) {
        segFilePath = [[[bundlePath stringByAppendingPathComponent:@"license"] stringByAppendingPathComponent:@"ms"] stringByAppendingPathComponent:@"ms_humanseg_small_v2.0.0.model"];
    }
    [NvsStreamingContext initHumanDetectionExt:segFilePath licenseFilePath:nil features:NvsHumanDetectionFeature_Background];

    // Include this model if you want gesture recognition.
    NSString *handActionPath = [[[bundlePath stringByAppendingPathComponent:@"license"] stringByAppendingPathComponent:@"ms"] stringByAppendingPathComponent:@"ms_hand_v2.0.0.model"];
    [NvsStreamingContext initHumanDetectionExt:handActionPath licenseFilePath:nil features:NvsHumanDetectionFeature_HandAction | NvsHumanDetectionFeature_HandLandmark];

    // Include this model if you want eyeball detection.
    NSString *eyeballPath = [[[bundlePath stringByAppendingPathComponent:@"license"] stringByAppendingPathComponent:@"ms"] stringByAppendingPathComponent:@"ms_eyecontour_v2.0.0.model"];
    [NvsStreamingContext initHumanDetectionExt:eyeballPath licenseFilePath:nil features:NvsHumanDetectionFeature_EyeballLandmark | NvsHumanDetectionFeature_SemiImageMode];

    // Include this model if you want fake-face effects (effects that sit on the face like a mask).
    NSString *fakefacePath = [[[bundlePath stringByAppendingPathComponent:@"license"] stringByAppendingPathComponent:@"ms"] stringByAppendingPathComponent:@"fakeface_v1.0.1.dat"];
    [NvsStreamingContext setupHumanDetectionData:NvsHumanDetectionDataType_FakeFace dataFilePath:fakefacePath];

    // Include this model if you want the avatar feature.
    NSString *avatarFilePath = [[[bundlePath stringByAppendingPathComponent:@"license"] stringByAppendingPathComponent:@"ms"] stringByAppendingPathComponent:@"ms_avatar_v2.0.0.model"];
    [NvsStreamingContext initHumanDetectionExt:avatarFilePath licenseFilePath:nil features:NvsHumanDetectionFeature_AvatarExpression];

    // Advanced Beauty model initialization
    NSString *advancedbeautyPath = [[[bundlePath stringByAppendingPathComponent:@"license"] stringByAppendingPathComponent:@"ms"] stringByAppendingPathComponent:@"advancedbeauty_v1.0.1.dat"];
    [NvsStreamingContext setupHumanDetectionData:NvsHumanDetectionDataType_AdvancedBeauty dataFilePath:advancedbeautyPath];
}
```

2.2 Using the license on Android
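This guide states for both platforms that the authorization only needs to run once. One way an app might enforce that is a small guard around its initialization code. The sketch below is only an illustration: `OneTimeInit` and `runOnce` are hypothetical names, not part of the Meishe SDK, and the guard only prevents repeated execution. It does not make other threads wait for the first call to finish.

```java
import java.util.concurrent.atomic.AtomicBoolean;

/** Hypothetical guard that lets an initialization action run at most once per instance. */
final class OneTimeInit {
    private final AtomicBoolean started = new AtomicBoolean(false);

    /**
     * Runs the action on the first call only.
     * Returns true if this call ran the action, false if it had already been started.
     */
    boolean runOnce(Runnable action) {
        if (!started.compareAndSet(false, true)) {
            return false; // an earlier call already claimed the initialization
        }
        action.run();
        return true;
    }
}

class OneTimeInitDemo {
    public static void main(String[] args) {
        OneTimeInit licenseInit = new OneTimeInit();
        // The lambda stands in for the SDK and face-model setup calls shown in this guide.
        Runnable setup = () -> System.out.println("running license setup");
        System.out.println("first call ran setup: " + licenseInit.runOnce(setup));
        System.out.println("second call ran setup: " + licenseInit.runOnce(setup));
    }
}
```

Holding one such guard in the application class would keep repeated activity launches from triggering the setup again.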

Licensing the Meishe SDK:

```java
String licensePath = "assets:/meishesdk.lic";
mStreamingContext = NvsStreamingContext.init(getApplicationContext(), licensePath, NvsStreamingContext.STREAMING_CONTEXT_FLAG_SUPPORT_4K_EDIT);
```

Face licensing needs the information shown in the code that follows. The authorization only needs to run once.

```java
/**
 * Face model initialization. The basic face model and the face common model are required; the others are optional.
 * The paths are not fixed; pass in whatever paths are correct for your app.
 * The code below comes from com.meishe.sdkdemo.MeicamContextWrap#initArSceneEffect in the SDK demo.
 * Constants such as FACE_106_MODEL and FACE_COMMON_DAT are not defined in this excerpt;
 * they are presumably model file names defined elsewhere in the demo.
 */
// Required: basic face model file
String modelPath = "assets:/facemode/ms/" + FACE_106_MODEL;
String licensePath = "";
if (BuildConfig.FACE_MODEL == 240) {
    modelPath = "assets:/facemode/ms/" + FACE_240_MODEL;
}
boolean initSuccess = NvsStreamingContext.initHumanDetection(MSApplication.getContext(),
        modelPath, licensePath,
        NvsStreamingContext.HUMAN_DETECTION_FEATURE_FACE_LANDMARK |
                NvsStreamingContext.HUMAN_DETECTION_FEATURE_FACE_ACTION |
                NvsStreamingContext.HUMAN_DETECTION_FEATURE_SEMI_IMAGE_MODE);
/*
 * The corresponding Effect SDK part (omit it if you do not use the Effect SDK)
 */
NvsEffectSdkContext.initHumanDetection(MSApplication.getContext(),
        modelPath, licensePath,
        NvsStreamingContext.HUMAN_DETECTION_FEATURE_FACE_LANDMARK |
                NvsStreamingContext.HUMAN_DETECTION_FEATURE_FACE_ACTION |
                NvsStreamingContext.HUMAN_DETECTION_FEATURE_SEMI_IMAGE_MODE);
//--------------------------------------------------------------------------------------------------
/*
 * Required: face common model initialization
 */
String faceCommonPath = "assets:/facemode/common/" + FACE_COMMON_DAT;
boolean faceCommonSuccess = NvsStreamingContext.setupHumanDetectionData(NvsStreamingContext.HUMAN_DETECTION_DATA_TYPE_FACE_COMMON, faceCommonPath);
/*
 * The corresponding Effect SDK part (omit it if you do not use the Effect SDK)
 */
NvsEffectSdkContext.setupHumanDetectionData(NvsEffectSdkContext.HUMAN_DETECTION_DATA_TYPE_FACE_COMMON, faceCommonPath);
//--------------------------------------------------------------------------------------------------
/*
 * Beauty and makeup (advanced beauty) model initialization
 */
String advancedBeautyPath = "assets:/facemode/common/" + ADVANCED_BEAUTY_DAT;
boolean advancedBeautySuccess = NvsStreamingContext.setupHumanDetectionData(NvsStreamingContext.HUMAN_DETECTION_DATA_TYPE_ADVANCED_BEAUTY, advancedBeautyPath);
/*
 * The corresponding Effect SDK part (omit it if you do not use the Effect SDK)
 */
NvsEffectSdkContext.setupHumanDetectionData(NvsEffectSdkContext.HUMAN_DETECTION_DATA_TYPE_ADVANCED_BEAUTY, advancedBeautyPath);
//--------------------------------------------------------------------------------------------------
/*
 * Fake face model initialization, used for mask-like effects that only follow the movement of the face
 */
String fakeFacePath = "assets:/facemode/common/" + FAKE_FACE_DAT;
boolean fakefaceSuccess = NvsStreamingContext.setupHumanDetectionData(NvsStreamingContext.HUMAN_DETECTION_DATA_TYPE_FAKE_FACE, fakeFacePath);
/*
 * The corresponding Effect SDK part (omit it if you do not use the Effect SDK)
 */
NvsEffectSdkContext.setupHumanDetectionData(NvsStreamingContext.HUMAN_DETECTION_DATA_TYPE_FAKE_FACE, fakeFacePath);
//--------------------------------------------------------------------------------------------------
/*
 * Portrait background segmentation model; low-power devices fall back to the low-level model
 */
String segPath = "assets:/facemode/common/" + HUMAN_SEG_MODEL;
if (null != NvsStreamingContext.getInstance()) {
    int level = NvsStreamingContext.getInstance().getDeviceCpuLevel();
    Logger.e(TAG, "MS CPU level:-->" + level);
    if (level == NvsStreamingContext.DEVICE_POWER_LEVEL_LOW) {
        segPath = "assets:/facemode/common/" + HUMAN_SEG_LEVEL_LOW_MODEL;
    }
}
boolean segSuccess = NvsStreamingContext.initHumanDetectionExt(MSApplication.getContext(),
        segPath, null, NvsStreamingContext.HUMAN_DETECTION_FEATURE_SEGMENTATION_BACKGROUND);
/*
 * The corresponding Effect SDK part (omit it if you do not use the Effect SDK)
 */
NvsEffectSdkContext.initHumanDetectionExt(MSApplication.getContext(),
        segPath, null, NvsStreamingContext.HUMAN_DETECTION_FEATURE_SEGMENTATION_BACKGROUND);
//--------------------------------------------------------------------------------------------------
/*
 * Hand landmark model, used by gesture effects such as the finger heart
 */
String handPath = "assets:/facemode/common/" + HAND_MODEL;
boolean handSuccess = NvsStreamingContext.initHumanDetectionExt(MSApplication.getContext(), handPath, null,
        NvsStreamingContext.HUMAN_DETECTION_FEATURE_HAND_LANDMARK
                | NvsStreamingContext.HUMAN_DETECTION_FEATURE_HAND_ACTION);
/*
 * The corresponding Effect SDK part (omit it if you do not use the Effect SDK)
 */
NvsEffectSdkContext.initHumanDetectionExt(MSApplication.getContext(), handPath, null,
        NvsStreamingContext.HUMAN_DETECTION_FEATURE_HAND_LANDMARK
                | NvsStreamingContext.HUMAN_DETECTION_FEATURE_HAND_ACTION);
//--------------------------------------------------------------------------------------------------
/*
 * Eyeball model initialization. It is used by the colored-contact-lens option in makeup;
 * skip it if you do not use that option.
 */
String eyeBallModelPath = "assets:/facemode/common/" + EYE_CONTOUR_MODEL;
boolean eyeBallSuccess = NvsStreamingContext.initHumanDetectionExt(MSApplication.getContext(), eyeBallModelPath, null,
        NvsStreamingContext.HUMAN_DETECTION_FEATURE_EYEBALL_LANDMARK | NvsStreamingContext.HUMAN_DETECTION_FEATURE_SEMI_IMAGE_MODE);
/*
 * The corresponding Effect SDK part (omit it if you do not use the Effect SDK)
 */
NvsEffectSdkContext.initHumanDetectionExt(MSApplication.getContext(),
        eyeBallModelPath, null, NvsEffectSdkContext.HUMAN_DETECTION_FEATURE_EYEBALL_LANDMARK);
//--------------------------------------------------------------------------------------------------
/*
 * Avatar model initialization, used by effects that move with facial expressions, such as the little fox
 */
modelPath = "assets:/facemode/common/" + AVATAR_MODEL;
boolean avatarSuccess = NvsStreamingContext.initHumanDetectionExt(MSApplication.getContext(),
        modelPath,
        null,
        NvsStreamingContext.HUMAN_DETECTION_FEATURE_AVATAR_EXPRESSION);
/*
 * The corresponding Effect SDK part (omit it if you do not use the Effect SDK)
 */
NvsEffectSdkContext.initHumanDetectionExt(MSApplication.getContext(),
        modelPath,
        null,
        NvsStreamingContext.HUMAN_DETECTION_FEATURE_AVATAR_EXPRESSION);
```
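
The Android code above stores each result in a boolean (`initSuccess`, `faceCommonSuccess`, `segSuccess`, and so on) but never inspects them. The following is a minimal sketch of one way to collect those results, keeping the required models (basic face and face common) separate from the optional ones. `ModelInitReport` and its methods are hypothetical helpers, not part of the Meishe SDK, and the literal booleans in the demo stand in for the real return values.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical collector for the boolean results of the model setup calls. */
final class ModelInitReport {
    private final Map<String, Boolean> required = new LinkedHashMap<>();
    private final Map<String, Boolean> optional = new LinkedHashMap<>();

    ModelInitReport addRequired(String name, boolean ok) {
        required.put(name, ok);
        return this;
    }

    ModelInitReport addOptional(String name, boolean ok) {
        optional.put(name, ok);
        return this;
    }

    /** True only when no required model reported a failure. */
    boolean requiredOk() {
        return !required.containsValue(false);
    }

    /** Names of every model whose setup returned false, required ones first. */
    List<String> failures() {
        List<String> failed = new ArrayList<>();
        collectFailures(required, failed);
        collectFailures(optional, failed);
        return failed;
    }

    private static void collectFailures(Map<String, Boolean> results, List<String> out) {
        for (Map.Entry<String, Boolean> entry : results.entrySet()) {
            if (!entry.getValue()) {
                out.add(entry.getKey());
            }
        }
    }
}

class ModelInitReportDemo {
    public static void main(String[] args) {
        // Replace the literals with initSuccess, faceCommonSuccess, handSuccess, etc.
        ModelInitReport report = new ModelInitReport()
                .addRequired("basicFace", true)
                .addRequired("faceCommon", true)
                .addOptional("segmentation", true)
                .addOptional("hand", false);
        System.out.println("required models ok: " + report.requiredOk());
        System.out.println("failed models: " + report.failures());
    }
}
```

With a report like this, an app could disable face features entirely when a required model fails and hide only the affected effect when an optional one does.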